Zero Knowledge Proofs / Secure Computing

Notes and reference for some intro crypt + zero knowledge. IN PROGRESS.

1. Intro

AI inference services require blind trust. Key security risks are privacy of the user’s input and integrity of the server’s output. Ideally, we want private and verifiable computation. The user should send an encrypted question to a trustworthy server which computes and the encrypted response. The user can then decryp the response and verify that it is the intended output of the model, without learning anything else about the model.

Three key capabilities to learn about:

- zero knowledge proofs

- succinct (non-interactive) proofs

- fully homomorphic encryption.

Zero Knowledge Proofs

What is a proof system? The prover has some claim and creates proof . This proof is sent to the verifier, who will run an algorithm to either accept or reject this proof.

In a zero knowledge proof, a prover wants to convince a verifier that a statement is true without revealing secret evidence.

2. Trapdoor Matrices

Frievald’s Algorithm

FACT: For nonzero and uniform random ,

Algorithm: we want to multiply two matrices and check that the matrix returned, , is correct.

- sample random

- server does , returns to us.

- check

- if yes, accept

Now, check with a matrix:

- sample random

- check

- if yes, accept

Pseudorandom matrix

Matrix generation algorithm given a key .

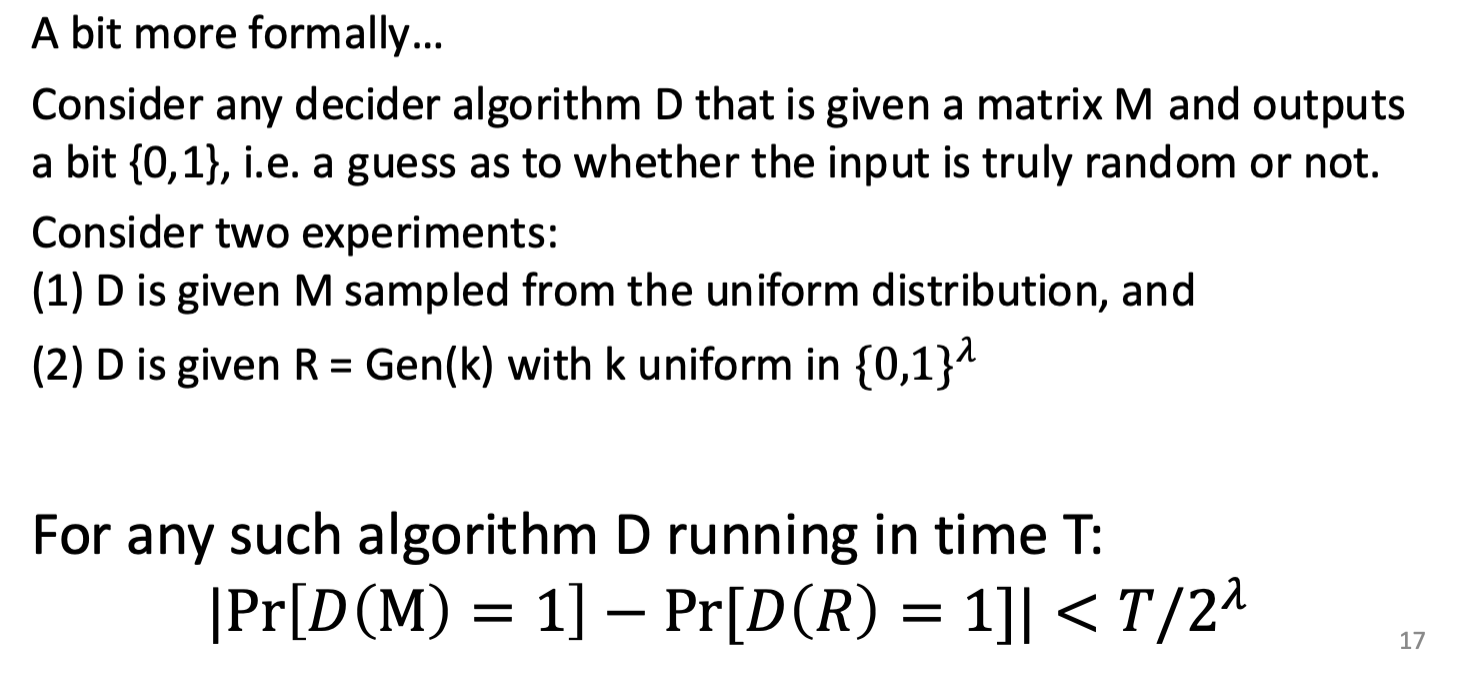

defines a pseudorandom family if, for uniform random key, no algorithm (without the input ) running in time can distinguish the matrix from random with probability better than .

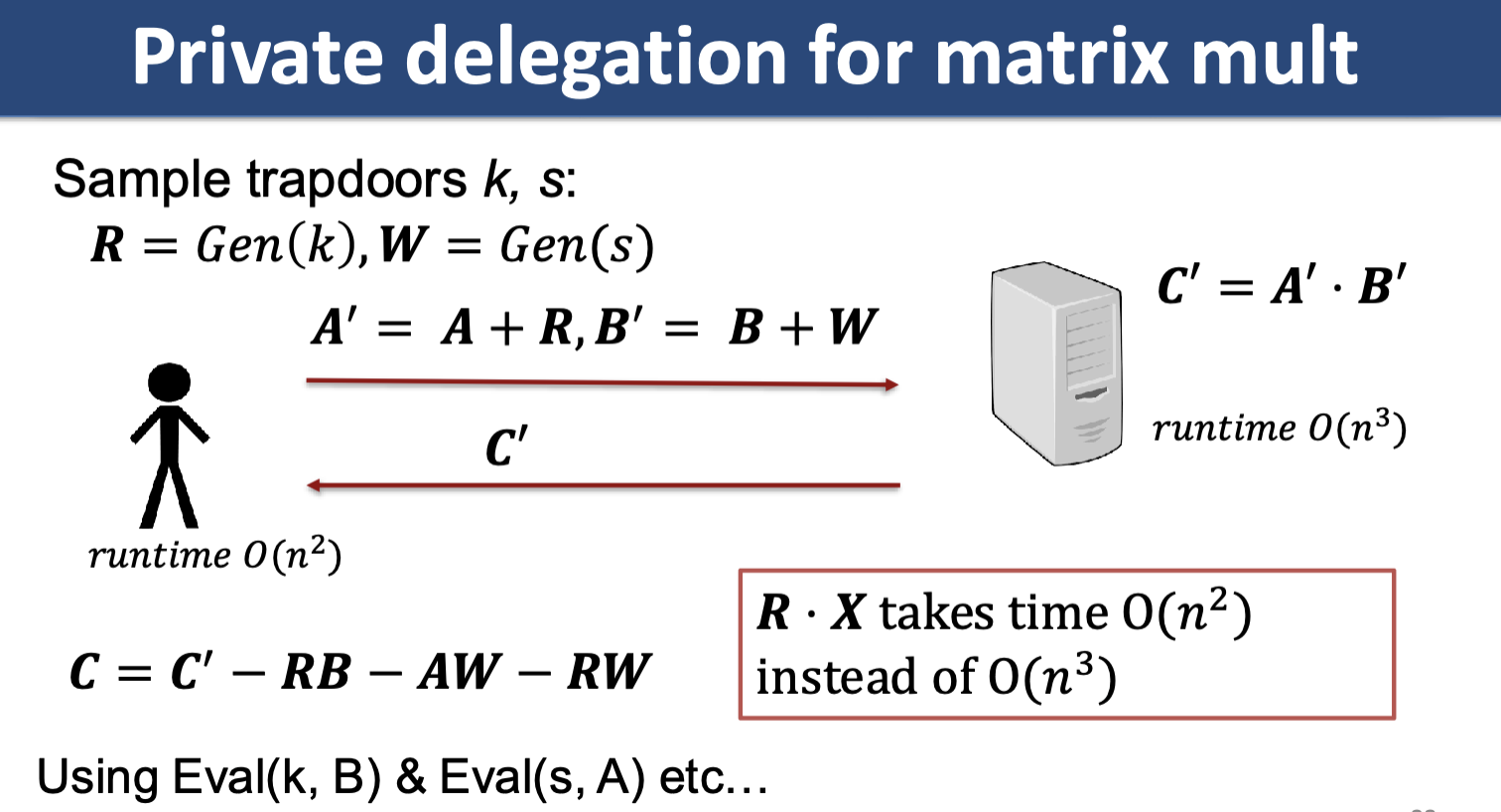

Trapdoored pseudorandom matrix

is a pseudorandom matrix and there is a special algorithm

that given any returns and runs in time .

3. Collision Resistance, Hardness

4. Private, verifiable computation (preliminaries)

Previously: DL and collision-resistant hash from DL

Groups of unknown order

A group where the order is computationally hard to compute, defined by group sampling alg

for a succinct (size polynomial in ) description of a group and an upper bound on its order.

The hidden order assumption holds for if for any polynomial time algorithm and distribution:

- a uniformly distributed group element

RSA assumption (fill in)

GUO with trusted setup: the algorithm is allowed to randomly generate secret values that are discarded.

Ex: samples secret primes and outputs defining the group This group has order , which is easy to compute if can be factored.

Collision resistant hash from GUO

- setup: and .

- hash function: input return .

Collision-resistant based on hidden order assumption: if for then .

Statistical distance

The statistical distance between random variables over a finite domain is :

Sequences of random variables are statistically indistinguishable if

Sequences on the same domain are computationally indistinguishable if for any polynomial time algorithm that receives an input and outputs or :

Learning parity with noise

The LPN assumption over a field has a dimension parameter , noise rate , and number of samples .

The assumption is that a list of samples of the form is computationally indistinguishable from a list of samples of the form where

- and are uniform

- is 0 with probability and otherwise uniform in .

Pseudorandom matrix from LPN

where and and all entries of are sampled as on previous slide.

Each entry of has the form

For each get samples of the form

Languages, NP, and P

A language is a subset of strings

A language is in NP if there exists a deterministic polynomial times algorithm and polynomial such that:

- for any there exists a “witness” of length such that

- if then for any of length .

A language is in P if there exists a polynomial time algorithm such that if and only if .

NP relations

A binary relation is a subset of The language of a binary relation is the set

is called an NP relation if the language is in NP.

How to represent algorithms?

- Turing machines (not useful for proof systems)

- Arithmetic circuit families

- RAM programs

Arithmetic circuits

Fix a finite field for some prime

An arithmetic circuit is defined . It is a directed acyclic graph (DAG) where internal nodes are labeled as or . Inputs are labeled . This defines an -variate polynomial with an evaluation recipe.

usually denotes the number of multiplication gates in .

5. Private, verifiable computation

Representing algorithms (above).

Boolean circuits can be represented as arithmetic circuits over :

- encoded as

- encoded as

- encoded as

Proof verification asymptotically faster than program evaluation = verifiable computation.

Sound: infeasible to produce valid proof if prover doesn’t know program Zero knowledge: proof doesn’t reveal anything about the program

Proof system syntax

- A transcript is a recording of messages between prover and verifier

- Prover algorithm:

- algorithm takes input and witness , internal state , transcript , and produces an updated state and next message, which is sent to verifier.

- Verifier algorithm:

- algorithm takes only input , internal state , transcript , and produces and updated state and next message, which is sent back to prover.

Completeness property

Notation: denotes output of verifier on interaction with prover for input and witness .

For all s.t. , there is a probability 1 that .

Soundness property

Notation: the output of verifier on interaction with an adversarial prover algorithm for input and witness .

For any where there does not exist such that and any polynomial time algorithm , the probability that is boundeed by

Weaker SNARKS with trusted setup

We believe there’s some trusted party that creates the parameters and then disappears. Risky because a broken trusted setup is very bad.

Sometimes we can use multi-party trusted setup.

Types of pre-processing setup:

- trusted setup per circuit

- universal trusted setup (secret part of setup not specific to circuit)

- updatable universal trusted setup (public parameters can be updated by anyone)

- transparent (setup does not use secret data at all)

Completeness property with setup

<P(pk, x,w), V(vk, x)> denotes output of verifier on interaction with prover for input and witness .

For all (finish line from slides)

Soundness property with setup

from slides

Knowledge & Soundness

What does it mean to have knowledge?

Soundness is a aproperty of a proof system that ensures cheaters cannot convince the verifier of a false statement. Soundness if the prover succeeds, there must exist a witness (except with very small probability). This is too weak for some applications.

Knowledge soundness captures the property that the prover must somehow “know” the witness in order to succeed in the protocol.

A protocol is knowledge sound if there exists an extractor algorithm E that can obtain the witness w from any successful prover (informal).

The extractor:

- E can run A for any number of steps

- rewind A to a previous state

- re-run A on different inputs.

For every adversarial prover and input that causes verifier to accept with non-negligible probability, outputs such that with high probability.

6. Proofs/Args of Knowledge, Zero Knowledge

Recap on knowledge soundness.

Proofs and arguments of knowledge

Oracle access to prover: Adversarial prover A is a stateful process that interacts with the verifier (internally runs a modified prover algorithm to get each next message).

If E is an algorithm that has oracle access to A then:

- E can simulate interaction with the verifier for some number of steps, obtaining each message that A sends, but not internal state

- E can rewind to A to a prior internal state, and redo the simulation on new inputs

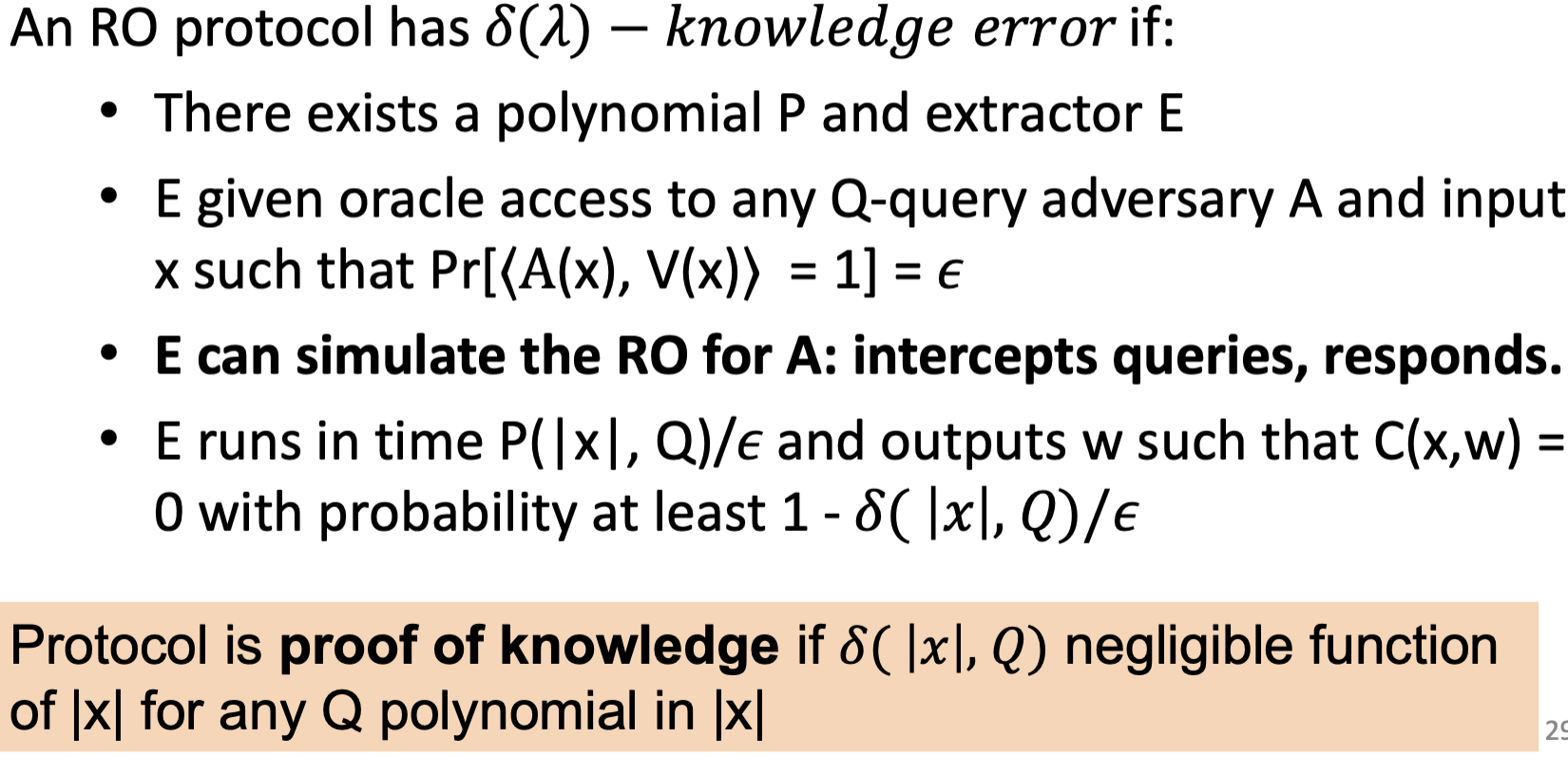

A protocol has knowledge error if

- There exists a polynomial and extractor

- given oracle access to any adversary and input s.t. and

- runs in time and outputs such that with prob at least .

Protocol is called proof of knowledge if negligible.

Argument of knowledge: same definition as proof of knowledge, except extractor is only guaranteed to succeed for polynomial time adversary .

Succinctness, SNARKs

An interactive argument for circuit with gates and setup parameter is succinct if:

- the total communication between prover and verifier over all rounds is

- the cumulative verifier runtime over all rounds is .

Succinct noninteractive arguments abbreviated SNARK. Zero-knowledge SNARK abbreviated zkSNARK.

The differences between SIA and SNARK:

- Non-interactive: There is only one message from the prover to the verifier, a single short proof

- Knowledge soundness: If the proof is accepted, then there is a valid witness that can be extracted (not just soundness, but proof of knowledge).

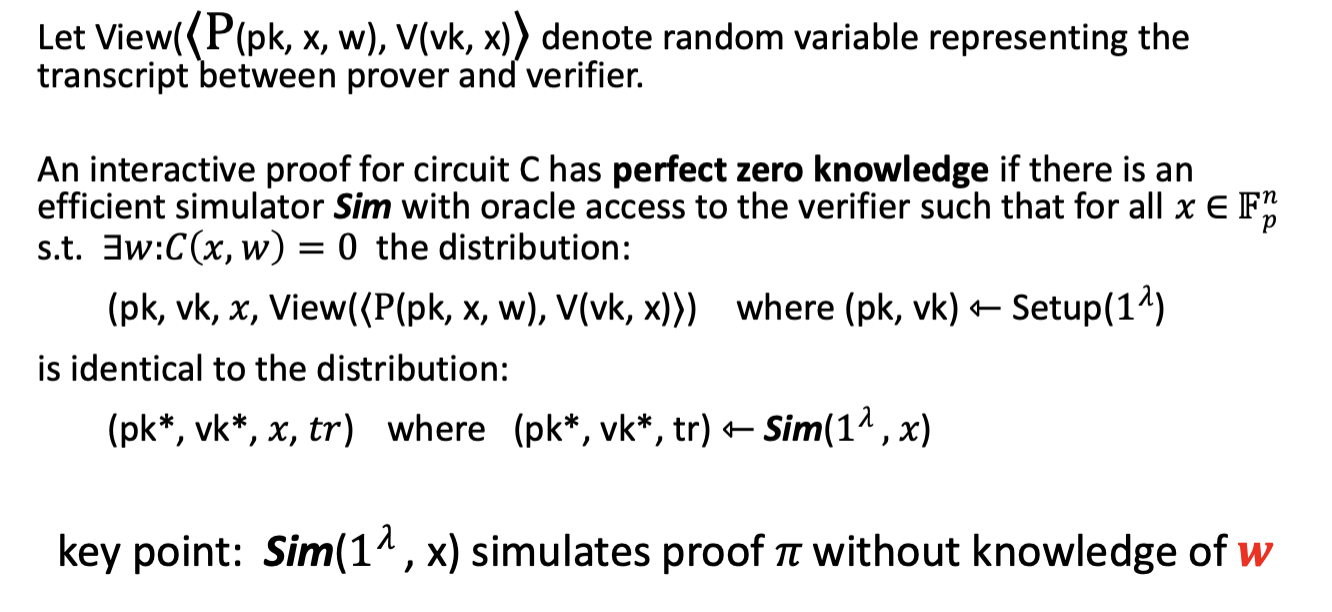

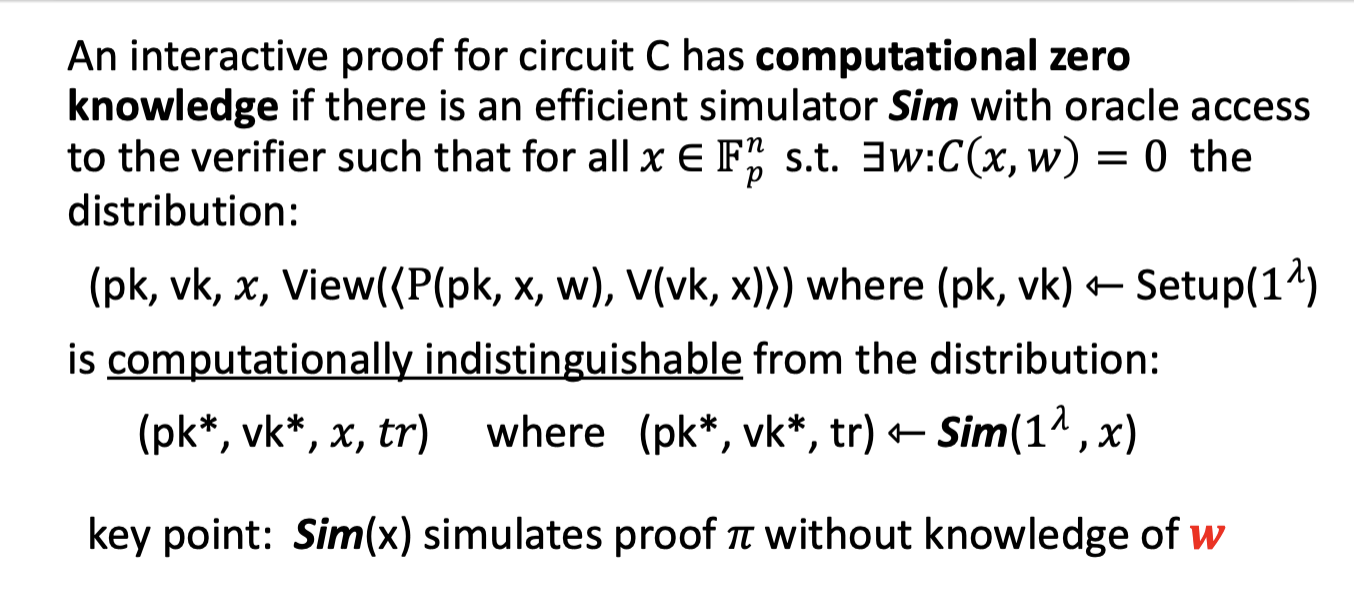

Zero knowledge

- There is a simulator, Sim, that is given oracle access to any adversarial verifier .

- Sim receives the input but not the witness .

- It runs in polynomial time and produces a simulation of the transcript between prover and verifier.

- The simulated transcript is indistinguishable from a real transcript.

Non-interactive zero knowledge

Sim can simulate the proof without the witness . Therefore, the proof reveals nothing about that an observer did not already know before seeing . How?

Clearly the Sim cannot produce a convincing proof , otherwise soundness breaks. Then how can it simulate?

- The proof is not convincing because Sim can “cheat” on the setup.

- It generates parameters and AFTER creating , or by using knwoledge of the secret in its simulation of a trusted setup.

- In other words, Sim has power that the real prover doesn’t have.

7. Polynomial Commitments and Classical ZKP

A polynomial commitment scheme (PCS) provides

- A collision-resistant hash function for polynomials over

- A special proof system for a polynomial input to the hash function

A hiding/ZK PC is:

- Binding: Once you commit to a polynomial, you cannot later change your mind and claim a different polynomial that matches some evaluations.

- This is like “soundness”–it prevents cheating.

- Hiding (or Zero-Knowledge): The commitment does not reveal information about the polynomial except what you later explicitly reveal. This is the “privacy” property.

ZKP of DL

Problem: Given group elements in a group of prime order , provide a ZK PoK of a witness such that ß.

Prover message: sample and send

Verifier challenge: sample and send

Prover response: send

Verifier decision: accept iff

Three round protocol of this form has a special name, the sigma protocol.

- Completeness: The protocol is perfectly complete.

- What about soundness and zero knowledge?

- We only have “honest verifier zero knowledge”

Special Soundness

A sigma protocol is special sound if from any two transcripts and where there is a polynomial time extractor that computes a witness.

Forking Lemma: Every special sound protocol with challenge space size has negligible knowledge error.

Honest verifier ZK

Assuming the verifier behaves honestly, we can simulate:

Simulator:

- sample random and random

- set

- return

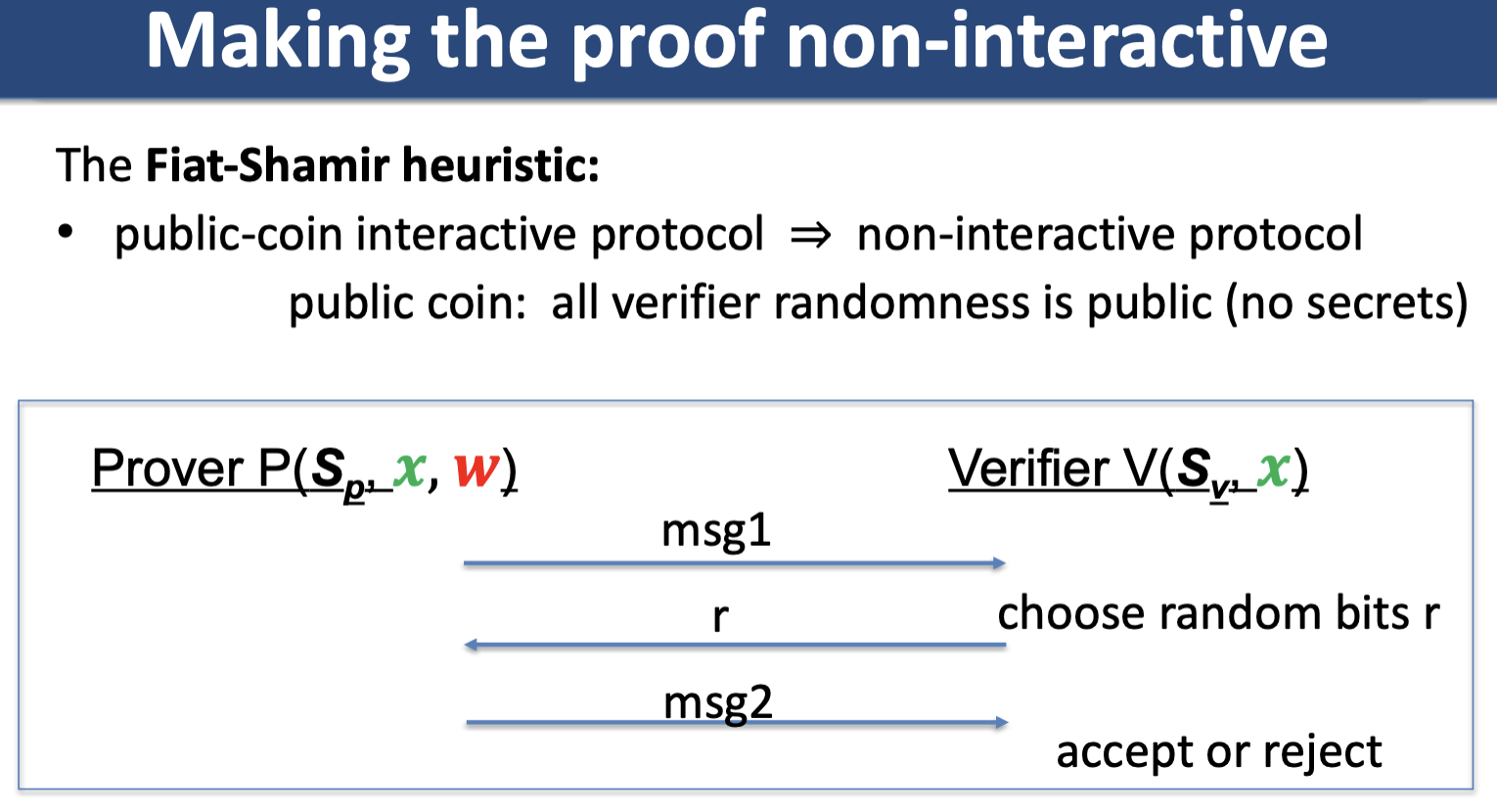

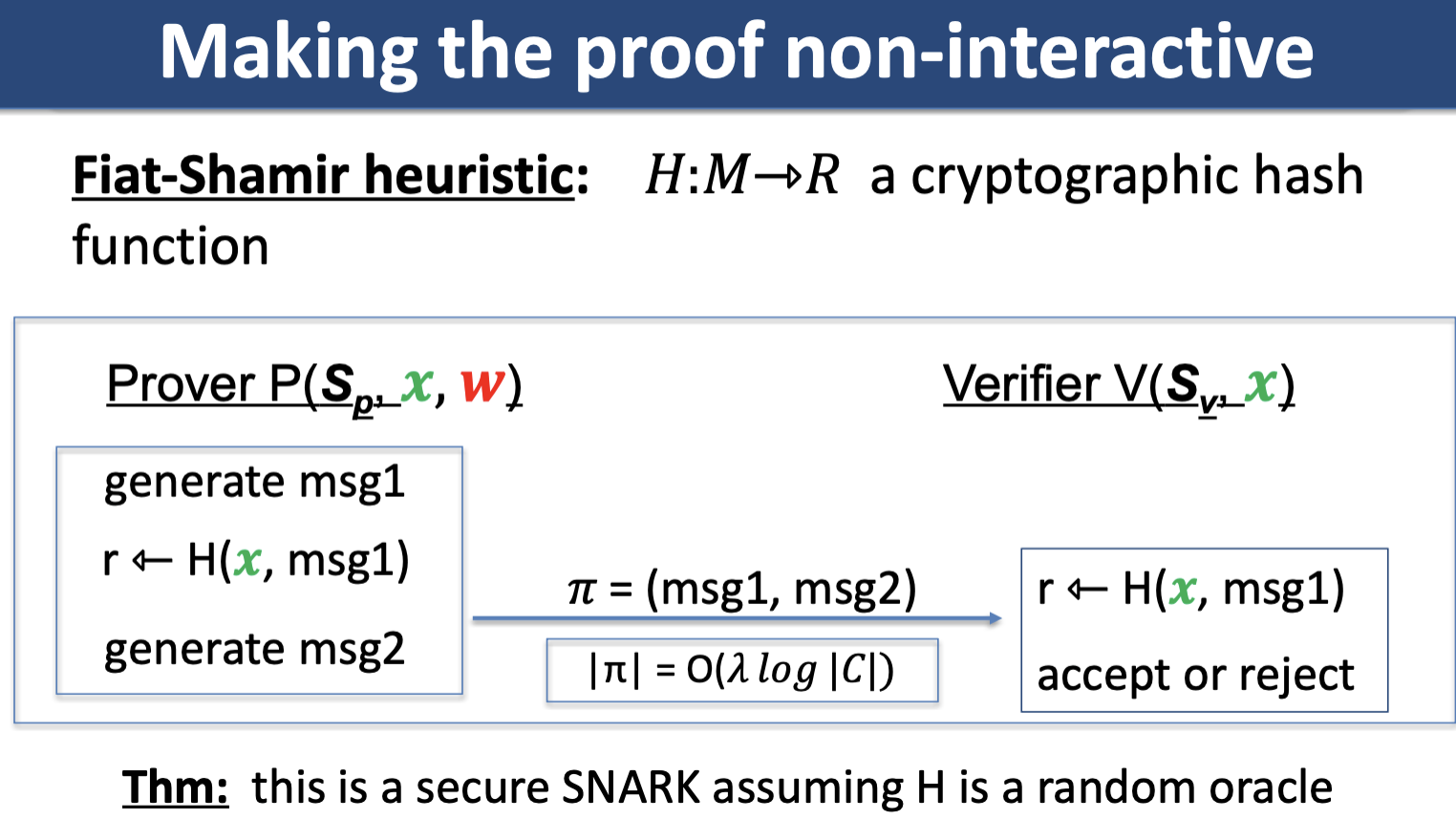

Fiat-Shamir Transform

A technique for taking an interactive PoK and creating a digital signature based on it. For sigma protocol, Fiat-Shamir transformation says instead of having verifier send a random challenge value to the prover, the prover can compute this value themselves by using a random function, leading us to the idea of random oracles.

Random oracle model

In the random oracle model, both prover and verifier algorithms have oracle access to a completely random function .

The random oracle model enables constructions of non-interactive proofs.

How can a simulator simulate a non-interactive proof without knowing a witness? In the random oracle model, the simulator can cheat by “re-programming” the random oracle at points (but must preserve the distribution of random oracle, e.g. re-program a point to uniform random output).

If a sigma protocol is knowledge sound, then its Fiat-Shamir transform in the random oracle model is also knowledge sound.

HVZK to ZK via Fiat-Shamir Transform

Theorem: If a sigma protocol satisfies HVZK, then assuming the random oracle model, the FS transform of satisfies statistical zero-knowledge.

Schnorr Signatures

Apply Fiat-Shamir to ZKPoK of DL:

Public key: where

Signature on message m: s.t. where .

Group homomorphism

A group homomorphism is a function that satisfies for all .

In additive notation, this is linearity:

Correlation intractability

A hash function family with sampling algorithm is correlation intractable for a binary relation if for all polynomial time algorithms and the following distribution:

CI and Fiat-Shamir

A hash family is correlation intractable if it is correlation intractable for all binary relations.

Theorem: If a sigma protocol is knowledge sound, then its Fiat-Shamir transform using a correlation-intractable hash family is also knowledge sound.

Knowledge in Random Oracle Model

A Q-query random oracle algorithm is an algorithm with oracle access to random oracle that makes at most queries to . An RO protocol is a noninteractive protocol between random oracle algorithms.

8. More Polynomial Commitments + Classical ZKPs

FS transform of Sigma Protocols

Multiround Special Soundness

-ary special soundness: given -ary forking tree of proof transcripts for , there is a polytime algorithm that extracts a witness s.t. .

Theorem: A -ary special sound -round protocol is knowledge sound for constant and .

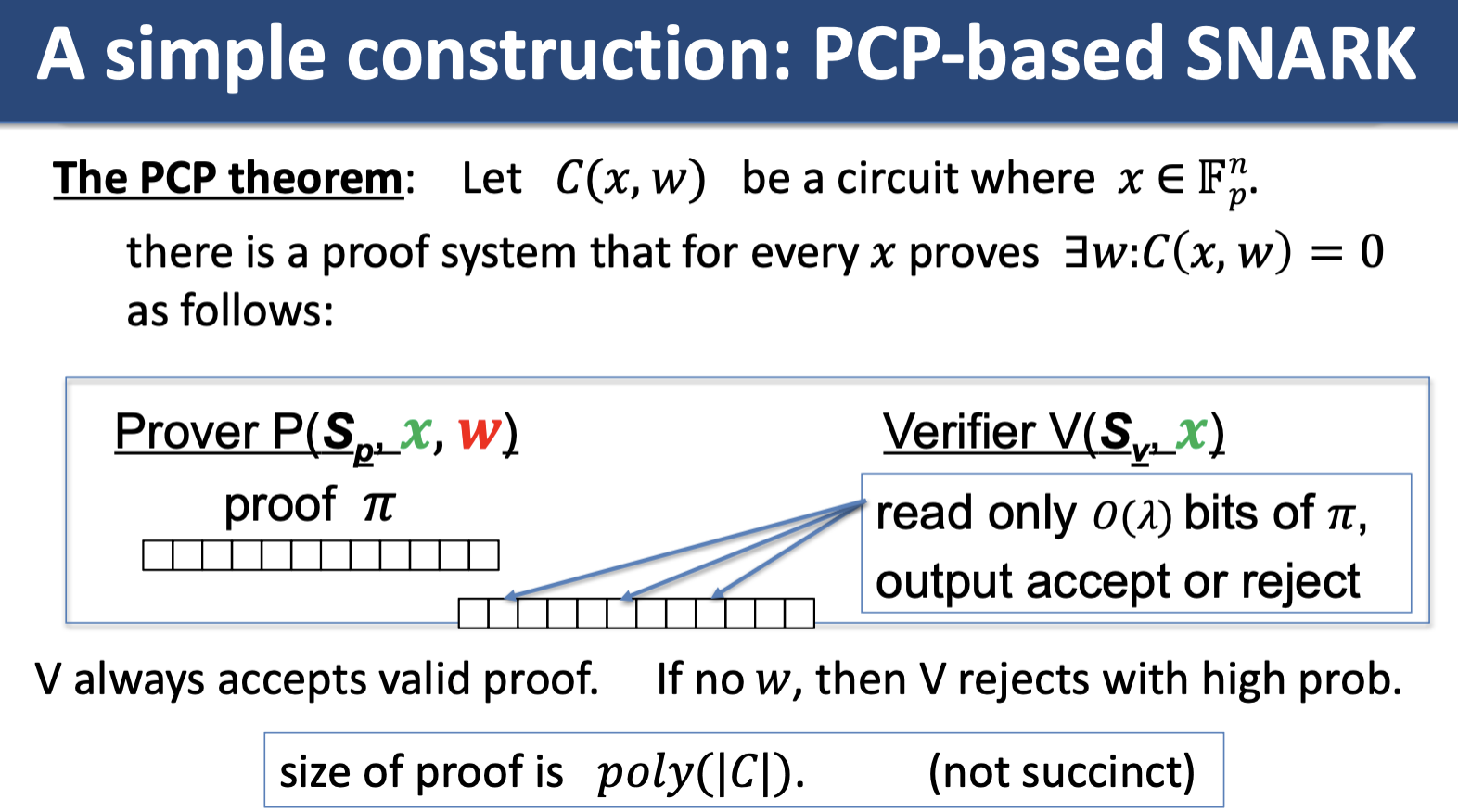

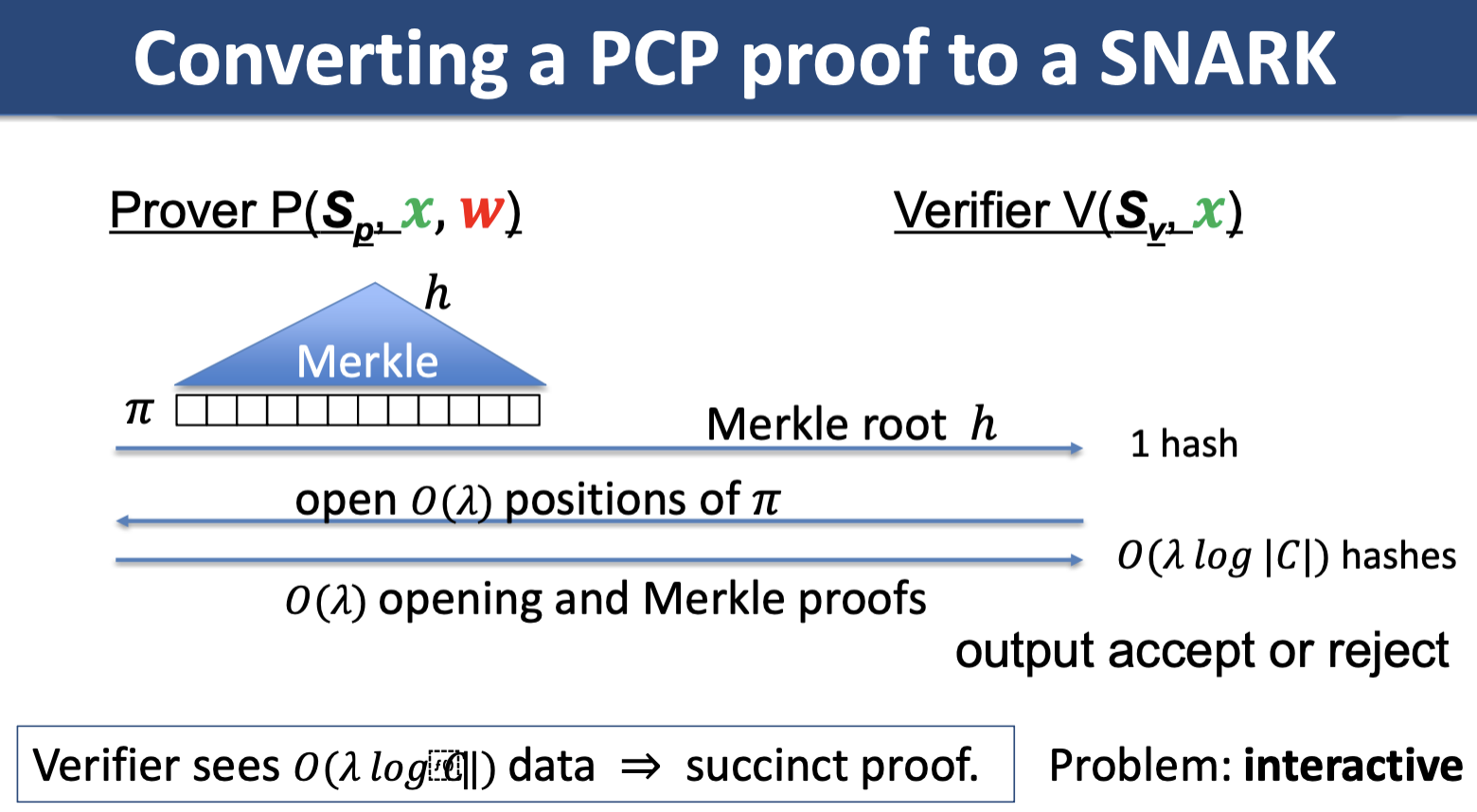

Simple PCP-based SNARK (Kilian, Micali)

Unfortunately, this method is impractical/the prover time is very high. Later: more efficient constructions, constructions that do not require random oracles.

9. Classical Succinct Proofs

None of the classical proofs examined previously have been succinct (communication has thus been proportional to size).

Recall PoK for DL: I know s.t. over . Send , return , send . Size of communication same as size of witness .

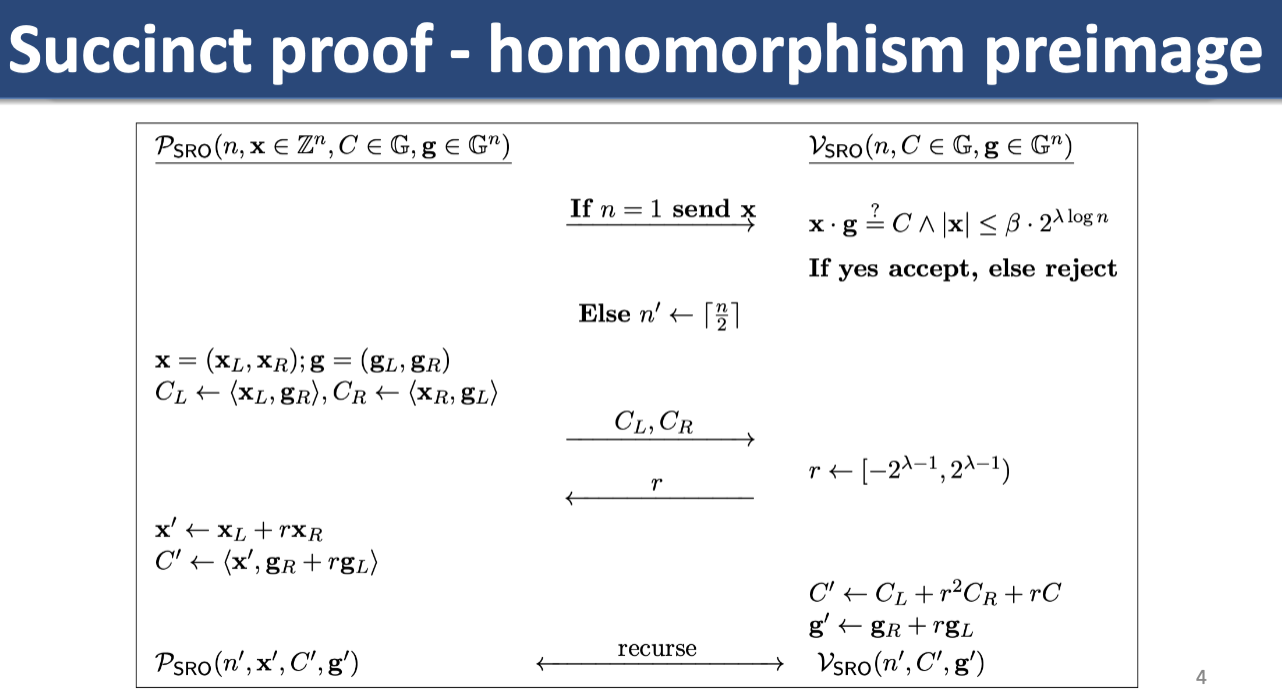

Consider s.t. . A protocol with only bits of communication?

As with Fiat-Shamir in PoK for DL, we can do something analogous for this situation.

Generalizing sigma protocols: Recall sigma protocol for PoK HPI (homomorphism preimage). We have a a homomorphism, s.t. . Generalize mapping from any group to . Prover sends , verifier returns , prover sends back . Verifier checks that (for the group operation).

The problem we’ve posed above is ParseError: KaTeX parse error: Undefined control sequence: \righarrow at position 11: f: \Z_p^n \̲r̲i̲g̲h̲a̲r̲r̲o̲w̲ ̲\G, e.g. with our multiple values we’re mapping vector . Check for the group operation.

This is not succinct!

There are rounds (first round start with , cut in half each round until ). In each round, we send two group elements , so total communication .

Recal Multiround Special Soundness (Lec 8). -ary special sound round protocol is knowledge sound for const and .

10. SNARK Compilers

Compilation paradigm for SNARKs today: arithmetization -> information theoretic pf system -> SNARK.

Interactive Oracle Proofs

Pf systems today generally not built from probabilistically checkable proofs (PCPs) in the classical sense, computationally infeasible (1 round, oracle access to pf).

PCP: 1-round oracle proof, where prover sends poly sized string , and verifier has oracle access to query locations of without reading whole string.

IOP: interactive oracle proof, multi round. Verifier receives new pf strings each round and has oracle access to all pf strings received.

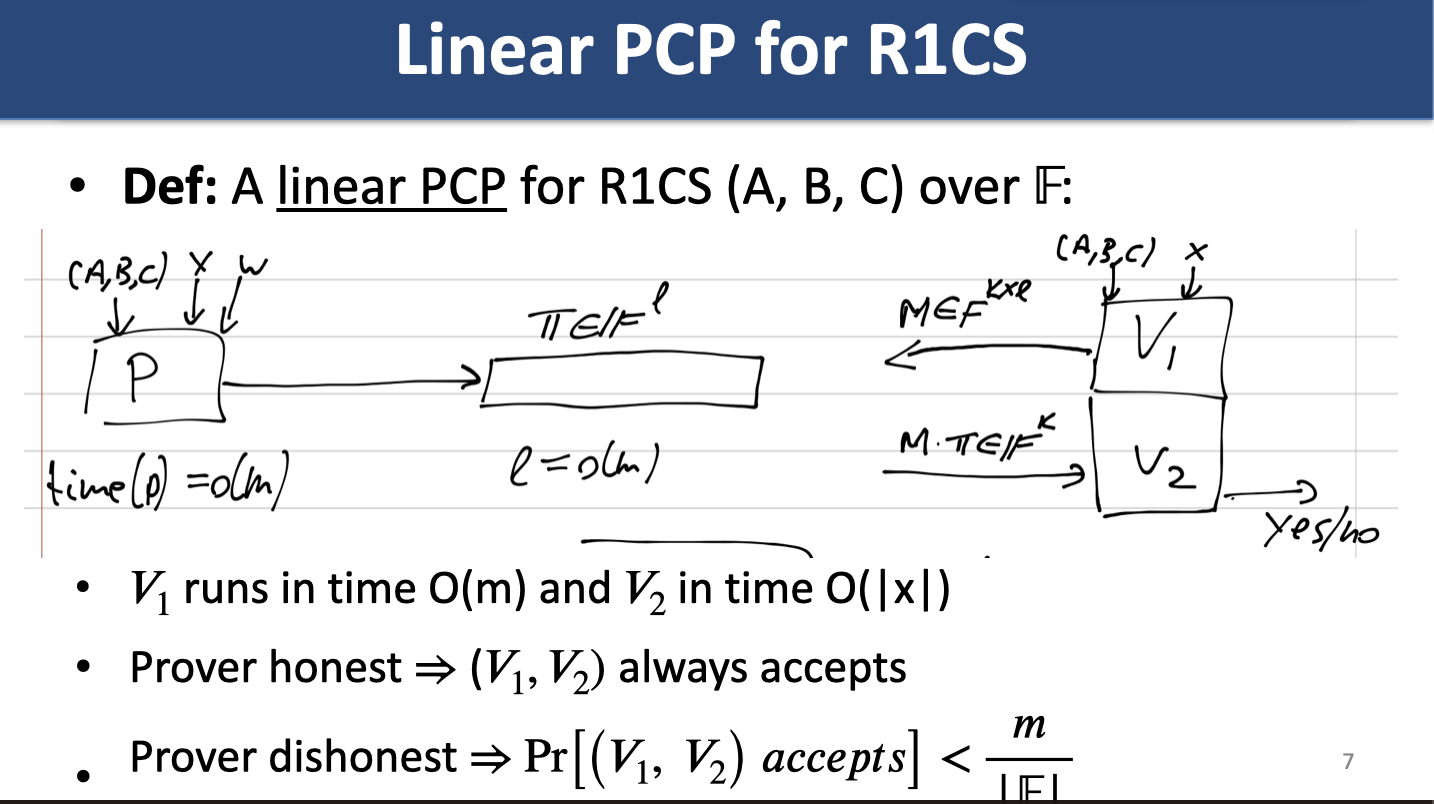

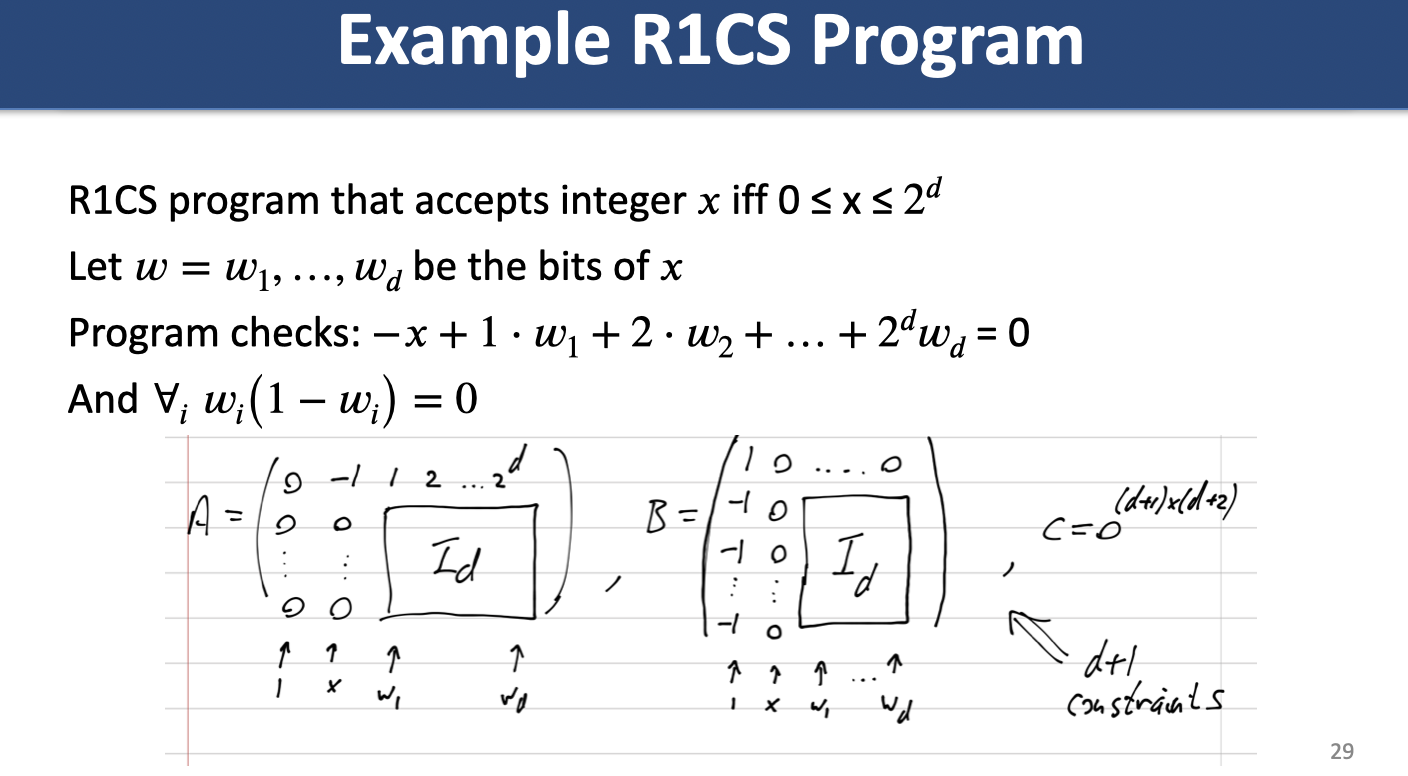

R1CS: we have matrices Input over R1CS program accepts iff

for component wise product (Hadamard).

Linear PCP: PCP is a fn . Equivalent:

- pf is vector over the field

- oracle receives query returns

Ishai, Kushilevitz, Ostrovksy '07 show 4 round linear PCP with linear homomorphic encryption as compiler can convert linear PCPs into SNARKs.

Efficiency of IOPs

- Multiple rounds allow for great efficiency gains over classical PCPs

- Lightweight compilation (Merkle trees, hash functions) compared to linear PCP.

Some modern proof systems building SNARKs off of IOPs,

- STARK (BBHR18)

- uniform programs (many reps fo small unit)

- arg size, fast oepratioins

- sublinear verification for uniform programs

- Aurora (BCRSVW18)

- general circuits, arg size

- linear verification time

Interactive linear PCPs?

Linear IOPs (each round send linear PCP oracle, linear queries to prior oracles sent).

leading to poly IOPs.

polynomial PCP

- pf is a degree d polynomial

- verifier oracle query returns .

- coordinate queries (i.e., read coefficient of ?)

Generic reduction: replace coordinate query with 2-round polynomial IOP.

SNARKS from polynomial commitments: arithmetic circuit -> constraint system, polynomial testing -(using polynomial commitments)-> interactive argument -(fiat-shamir / hashing)-> non interactive proof.

IOPs with Preproc: in a setup phase, a preprocessor outputs several oracles. Any verifier can read the whole oracle to check its correctness. During ‘online’ interaction with prover, it may make queries to the preprocessed code.

Polynomial IOPs

Informally, PIOP efficiency and security: In a PIOP for NP relation with arithmetic complexity (size of the circuit computing ):

- verifier runs in time

- oracles have degree

- prover honest and verifier always accepts

- if and prover does not ‘know’ any witness such that verifier rejects with overwhelming probability

- i.e., accept with probability at most

PIOP not used in practice.

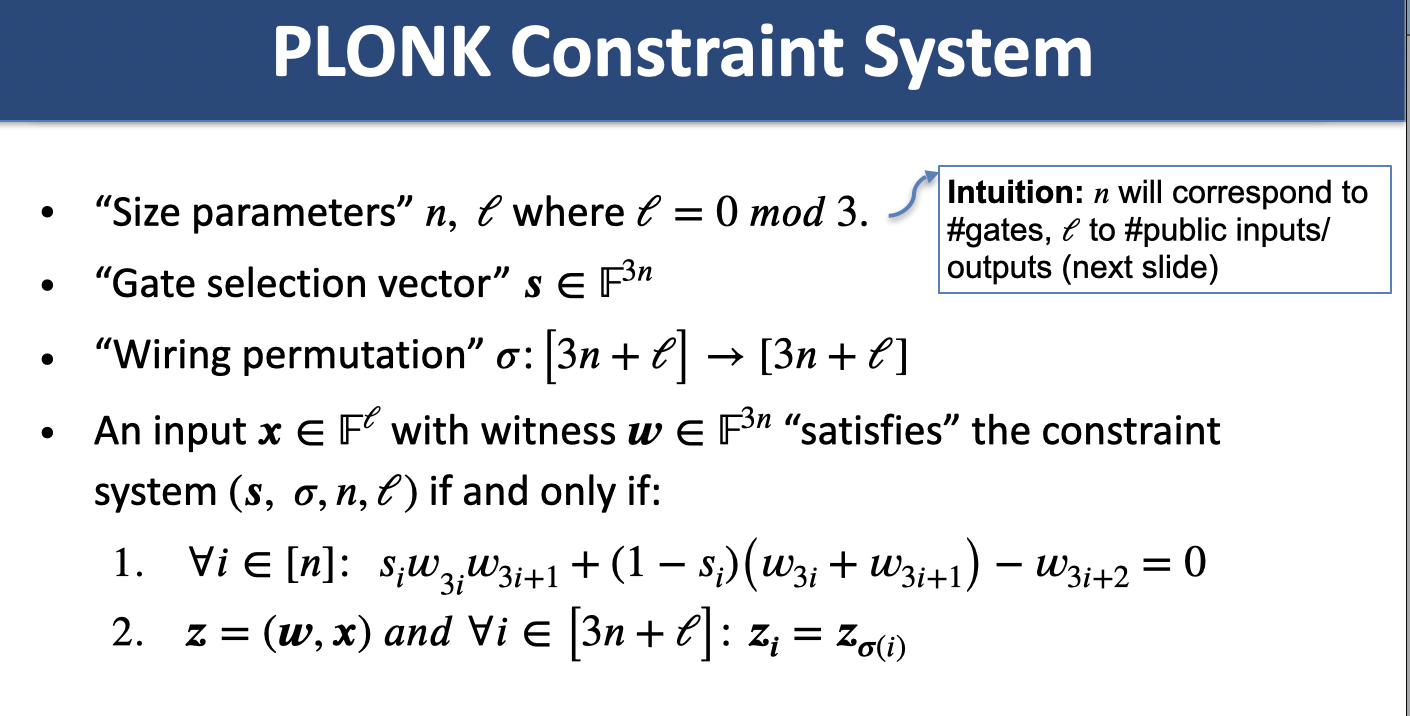

*PLONK: There exists a 3 round polynomial IOP for any NP relation (with arithmetic circuit complexity ) with:

- 2 preproccessed oracles, univariate degree

- 3 online oracles (degree )

- 5 queries with nonzero outputs

- 8 queries overall

Constraint systems

Recall arithmetic circuits (one kind of constraint system). New: PLONK, R1CS.

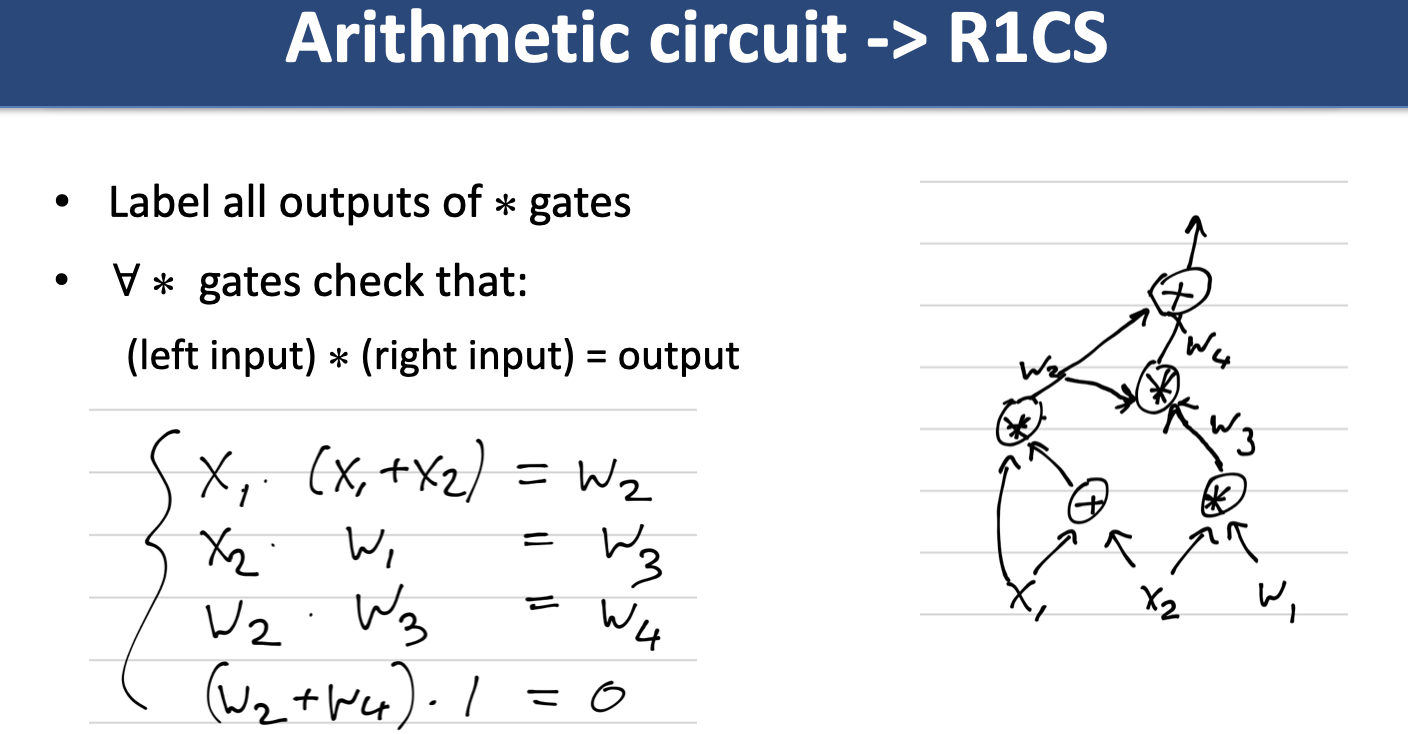

Arithmetic Circuit to R1CS

Theorem: For any circuit , the condition can be expressed as an R1CS program with constraints where ParseError: KaTeX parse error: Expected 'EOF', got '#' at position 5: m = #̲(\text{multipli…

PLONK

11. R1CS Linear IOP to SNARK

Recap on compilation paradigms:

- interactive oracle proofs (IOP)

- compile with Merkle trees + Fiat-Shamir/RO hash

- polynomial IOP

- compile with polynomial commitments

- linear IOPs

- compile with linear only encodings

R1CS to Linear PCP

We have matrices and Define degree polynomial by interpolating Define degree polynomial by interpolating . Define degree polynomial as interpolation of for and for .

Idea: if then ( is Hadamard/comp-wise product). If prover is honest, then as defined above. Linear PCP verifier “checks” at random implies with probability

12. LPCP to SNARK and HVZK

Linear PCP to SNARK

- trusted party runs :

- choose secret random

- (encode individual comps)

- (encode invididual comps)

Idea: prover can only output encodings of linear transformations of queries:

Verifier can check that using QuadTest. Remaining issue: how to force prover to use the same ?

Solution: add one more query that does a random linear check:

- choose random

- query for where

- check that

Prover forced to choose independently of the secret .

Summary:

R1CS to linear PCP (previous)

then linear PCP to SNARK:

- trusted setup S forms the LPCP queries and encodes them

- SNARK prover forced to output affine linear transformations of queries

- extra query forces prover to apply same linear transformation to each query

Proof is 4 elements, verifier does two QuadTests.

ZK Linear PCP

A linear PCP for a R1CS program is zero knowledge if there exists a simulator Sim that takes an input and verifier program satisfying the following:

For all such that such that program accepts and all verifiers V’ making querires M’, Sim outputs:

a random variable distributed identically to from

and .

Honest Verifier Zero Knowledge

HVZK for LPCP: assume verifier follows the protocol, then Sim produces output which is distributed identically to

In transformation to SNARK, our trusted setup selects the query vectors honestly.

R1CS to HVZK LPCP

- Prover samples random

- interpolate at one more point:

- are degree each and is degree

- R1CS constraint equation still equivalent to for , thus implied by

- why ZK? queries reveal no more than and at secret point. These are independent and unfiromly distributed if

implies

(independent, uni random). Also,

13. Polynomial IOP for PLONK Constraints

Construction roadmap:

- arithmetic circuit to

- constraint system to

- polynomial IOP to (via polynomial commitment)

- interactive argument to (Fiat Shamir)

- SNARK

Arithmetic Circuit to Constraints

The input is a 2-fan-in arithmetic circuit with arithmetic gates and input/output wires. There are public I/O wires. Any circuit can be padded with dummy I/O inputs so that mod . Construct

- Associate the indices to gate left-input values, to gate right-input values, to gate output values, and with the public I/O wires.

- Encode gates: set if the -th gate is multiply, else if -th gate is add.

- Encode wiring by defining the permutation as a composition of cycles.

The permutation places toggether in cycles all indices assigned to left-input, right-input, output, and circuit I/O values that are equal according to the wiring.

and satisfy this constraint system iff the ’s are valid assignments to the -th gate’s left/right/output values for all AND the first public I/O values of the circuit are equal to

Constraints to Poly IOP

There’s a lot… Interpolating degree polynomial (selection polynomial), assignment polynomial, permutation polynomial.

Strawman polynomial IOP and efficient permutation argument. Permutation poly IOP, combined poly IOP, linearization optimization.

14. Polynomial IOP for PLONK Constraints, Cont.

Recap: Construction roadmap (arithmetic circuit -> constraint system -> polynomial IOP -(via polynomial commitment)-> interactive argument -(Fiat Shamir)-> SNARK).

Complete SNARK for Polynomial Constraints

Polynomial Commitment

Input: degree at most .

- Setup()

- Commit() for , ideally

- Open()

Public coin interactive protocol (communication sublinear in ):

- Eval()

Commitment is binding and evaluation is binding/argument of knowledge.

Compiling PIOP with Poly Commit

- Prover replaces each oracle of degree with a commitment

- For each query to :

- Prover sends

- Prover/Verifier run Eval(

Polynomial Commitments

- Several options:

- Bilinear group based, trusted setup

- DARK w/RSA, trusted setup

- DARK w/class groups, no trusted setup

- All of these commitment schemes are additively homomorphic

In an additive (homomorphic) polynomial commitment scheme, An additive polynomial commitment scheme can compile any Poly IOP to an argument with just 1 Eval execution.

PCS Batch Opening

Generic optimization for opening at multiple points with homomorphic polynomial commitments:

- Given let and degree . For iff there exists such that .

- To open the commitment at points to output commitment to quotient , derive/receive challenge derive commitment to , and open at point to show

- Verifier uses homomorphism to derive from and .

This generalizes to multiple polynomials at multiple points. Given commits to and ParseError: KaTeX parse error: Expected 'EOF', got '}' at position 17: …{x_{ij}, y_{ij}}̲_{i \in [k], j … for :

- Let and interpolate Define

- Let Note that iff divides each

- To open derive/receive first challenge , compute quotient . Output commitment to .

- Derive/receive second challenge Derive commitment to and open at point to show

Kate Batch Opening

Special case of Kate PCS: batch opening only 1 group element.

- Pairing

- crs = for

- To batch open derive/receive challenge compute quotient Output commitment

- Verifier derives : and Verifier checks the pairing equation

Final SNARK Size

- 3 Commitments for (3 group elements)

- (5 field elements)

- Opening and linear comb of using Eval optimization for multiple polynomials at multiple points

- 1 group element (less efficient verifier)

- 2 group elements (more eff. ver.)

Total size: 4-5 group elements, 5 field elemnts

Original PLONK size: 7 group elements, 7 field elements