Notes on Linear Algebra Done Right

note: incomplete and may never be finished. also the formatting is bad because i just ripped this off of my notion

Chapter 1—Vector Spaces

1A

- Definition of complex numbers, sets, tuples

- $\mathbf{F}$ stands for $\mathbf{R}$ or $\mathbf{C}$ (fields)

- $\mathbf{F}^n$ is defined as the set of all lists of length $n$ of elements of $\mathbf{F}$

1B Definition of Vector Spaces

- Vector space is a set with addition and scalar multiplication defined s/t:

- addition commutes + associative

- additive & multiplicative id.

- additive inverse

- distributive property

- If $S$ is a set, then $\mathbf{F}^S$, the set of functions from $S$ to $\mathbf{F}$, is a vector space

- Additive id. and inv. in v.s. are unique

- $V$ denotes a vector space over $\mathbf{F}$

1C Subspaces

- $U \subseteq V$ is a subspace of $V$ if $U$ is a vector space with the same additive id. and operations as on $V$.

- In other words, $U$ is a subspace of $V$ iff $U$ satisfies $0 \in U$, closure under addition, and closure under scalar mult

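- Sum of subspaces: for subspaces $V_1, \dots, V_m$ of $V$, $V_1 + \dots + V_m = \{v_1 + \dots + v_m : v_1 \in V_1, \dots, v_m \in V_m\}$, and $V_1 + \dots + V_m$ is the smallest subspace of $V$ containing $V_1, \dots, V_m$.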

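- For subspaces $V_1, \dots, V_m$ of $V$, the sum $V_1 + \dots + V_m$ is a direct sum if each element of $V_1 + \dots + V_m$ can be written in only one way as a sum $v_1 + \dots + v_m$ where each $v_k \in V_k$; it is then denoted $V_1 \oplus \dots \oplus V_m$

- Direct sum iff the only way to write 0 as a sum $v_1 + \dots + v_m$, where each $v_k \in V_k$, is by taking each $v_k = 0$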

-

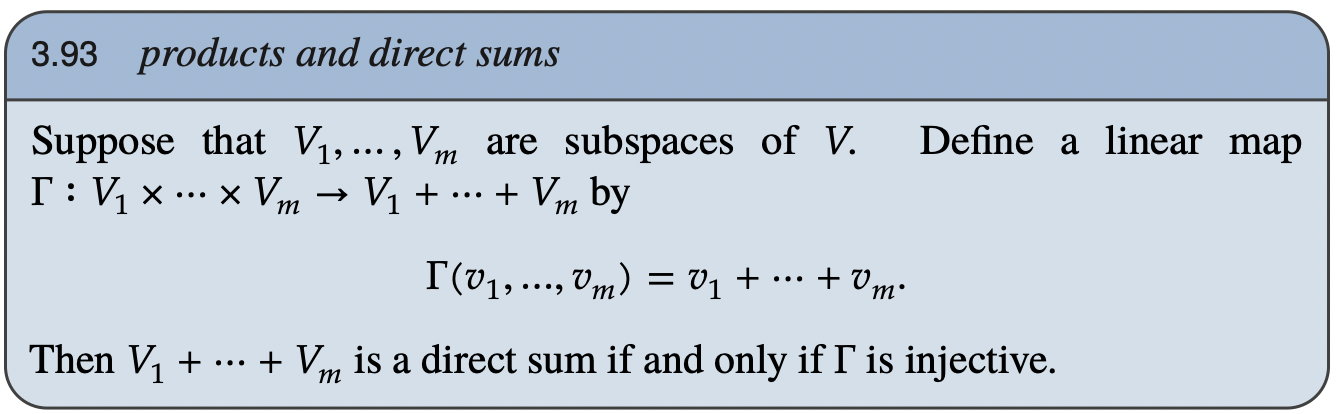

Suppose $U, W$ are subspaces of $V$. Then $U + W$ is a direct sum $\iff U \cap W = \{0\}$.

Chapter 2—Finite Dimensional Vector Spaces

2A Span and Linear Independence

- Defines linear combination, span

- Span of a list of vectors in $V$ is the smallest subspace of $V$ containing all vectors in the list.

- Vector space is finite dimensional if some list of vectors in it spans the space

- Defines polynomials over $\mathbf{F}$, $\mathcal{P}(\mathbf{F})$, and polynomials of degree at most $m$ over $\mathbf{F}$, $\mathcal{P}_m(\mathbf{F})$.

- A vector space is infinite dimensional if it is not finite dimensional.

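- A list $v_1, \dots, v_m$ of vectors in $V$ is linearly independent if the only choice of $a_1, \dots, a_m \in \mathbf{F}$ s/t $a_1 v_1 + \dots + a_m v_m = 0$ is $a_1 = \dots = a_m = 0$

- Suppose $v_1, \dots, v_m$ is linearly dependent in $V$. Then there exists $k \in \{1, \dots, m\}$ s.t. $v_k \in \operatorname{span}(v_1, \dots, v_{k-1})$.

- If the k-th term is removed from $v_1, \dots, v_m$, then the span of the remaining list equals the span of the original list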

- Every subspace of a finite dimensional vector space is finite dimensional
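A quick way to sanity-check linear independence on concrete lists: a minimal sketch, assuming the vectors are tuples of rationals in $\mathbf{Q}^n$. The helper names `rank` and `is_linearly_independent` are made up for these notes (they are not from the book). A list is linearly independent exactly when the dimension of its span equals its length.

```python
from fractions import Fraction

def rank(vectors):
    """Dimension of the span of a list of vectors, via row reduction over Q."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    ncols = len(rows[0]) if rows else 0
    r = 0  # number of pivot rows found so far
    for col in range(ncols):
        # look for a row at or below r with a nonzero entry in this column
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            factor = rows[i][col] / rows[r][col]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def is_linearly_independent(vectors):
    """Independent iff the span has dimension equal to the length of the list."""
    return rank(vectors) == len(vectors)

independent = [(1, 0, 0), (1, 1, 0), (1, 1, 1)]
dependent = [(1, 2, 3), (4, 5, 6), (7, 8, 9)]  # third vector = 2*(second) - (first)

print(is_linearly_independent(independent))
print(is_linearly_independent(dependent))
# Dropping the third vector (which lies in the span of the first two)
# does not change the dimension of the span.
print(rank(dependent[:2]) == rank(dependent))
```

Exact `Fraction` arithmetic is used so that "equals zero" is a reliable test; with floats the pivot check would need a tolerance.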

2B Bases

- Defines basis

- A list $v_1, \dots, v_n$ of vectors in $V$ is a basis of $V$ iff every $v \in V$ can be written uniquely in the form $v = a_1 v_1 + \dots + a_n v_n$, where $a_1, \dots, a_n \in \mathbf{F}$.

- Every spanning list contains a basis

- Every finite dimensional vector space has a basis

- Every linearly independent list of vectors in a finite dimensional vector space can be extended to a basis of the vector space

- Every subspace of a finite dimensional $V$ is part of a direct sum equal to $V$.

- i.e., sps. $V$ finite dimensional and $U$ a subspace of $V$. Then there exists a subspace $W$ of $V$ s.t. $V = U \oplus W$.

2C Dimension

- Any two bases of a finite dimensional vector space have the same length

- Dimension of a finite-dimensional vector space $V$ is the length of any basis of the vector space, denoted $\dim V$

- Dimension of a subspace of a finite dimensional vector space is at most that of the original vector space

- $V$ finite dim. v.s. Then every linearly ind. list of vectors in $V$ of length $\dim V$ is a basis of $V$.

- $V$ finite dim v.s. and $U$ a subspace of $V$ s.t. $\dim U = \dim V$. Then $U = V$.

- Every spanning list of vectors of $V$ of length $\dim V$ is a basis of $V$

- If $V_1, V_2$ are subspaces of a finite dim v.s., then $\dim(V_1 + V_2) = \dim V_1 + \dim V_2 - \dim(V_1 \cap V_2)$.

Chapter 3—Linear Maps

3A Vector Space of Linear Maps

- Definition of a linear map:

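- a linear map from $V$ to $W$ is a function $T : V \to W$ with the following properties:

- additivity: $T(u + v) = Tu + Tv$ for all $u, v \in V$

- homogeneity: $T(\lambda v) = \lambda (Tv)$ for all $\lambda \in \mathbf{F}$ and all $v \in V$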

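- Set of linear maps from $V$ to $W$ is denoted $\mathcal{L}(V, W)$

- Set of linear maps from $V$ to $V$ is denoted $\mathcal{L}(V)$

- Suppose $v_1, \dots, v_n$ a basis of $V$ and $w_1, \dots, w_n \in W$. Then there exists a unique linear map $T : V \to W$ such that $T v_k = w_k$ for each $k = 1, \dots, n$.

- Linear maps are closed under addition and scalar multiplication (i.e., summing two linear maps is still a linear map) defined as $(S + T)(v) = Sv + Tv$ and $(\lambda T)(v) = \lambda (Tv)$

- $\mathcal{L}(V, W)$ is a vector space with the operations defined above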

3B Null Spaces and Ranges

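- For $T \in \mathcal{L}(V, W)$, the null space of $T$ is the subset of $V$ whose vectors map to 0 under $T$: $\operatorname{null} T = \{v \in V : Tv = 0\}$

- Null space is a subspace (above, null T is a subspace of V)

- A function $T : V \to W$ is injective if $Tu = Tv$ implies $u = v$

- $T \in \mathcal{L}(V, W)$. Then $T$ injective iff $\operatorname{null} T = \{0\}$.

- For $T \in \mathcal{L}(V, W)$, the range of $T$ is the subset of $W$ consisting of the vectors that are equal to $Tv$ for some $v \in V$: $\operatorname{range} T = \{Tv : v \in V\}$

- The range is a subspace (above, range T is a subspace of W)

- A function $T : V \to W$ is surjective if its range equals $W$

- Fundamental theorem of linear maps

- sps. $V$ finite dimensional and $T \in \mathcal{L}(V, W)$. Then range $T$ is finite dimensional and dim $V$ = dim null $T$ + dim range $T$.

- Sps. $V, W$ finite dim. v.s. s.t. dim V > dim W. Then no linear map from $V$ to $W$ is injective.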

- A homogeneous system of linear equations with more variables than equations has nonzero solutions

- A system of linear equations with more equations than variables has no solution for some choice of the constant terms
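To see the fundamental theorem of linear maps on a concrete example, here is a minimal sketch, assuming $T : \mathbf{Q}^3 \to \mathbf{Q}^2$ is given by a matrix `A` w.r.t. the standard bases. The helpers `rref`, `null_space_basis` and `apply` are hypothetical names written for these notes. The number of pivot columns is dim range $T$, and the null space basis is read off from the free columns.

```python
from fractions import Fraction

def rref(A):
    """Return (reduced row echelon form of A, list of pivot column indices)."""
    M = [[Fraction(x) for x in row] for row in A]
    pivots, r = [], 0
    for col in range(len(M[0])):
        pivot = next((i for i in range(r, len(M)) if M[i][col] != 0), None)
        if pivot is None:
            continue
        M[r], M[pivot] = M[pivot], M[r]
        lead = M[r][col]
        M[r] = [x / lead for x in M[r]]          # scale pivot row so the pivot is 1
        for i in range(len(M)):
            if i != r and M[i][col] != 0:        # clear the column above and below
                factor = M[i][col]
                M[i] = [a - factor * b for a, b in zip(M[i], M[r])]
        pivots.append(col)
        r += 1
    return M, pivots

def null_space_basis(A):
    """One basis vector of null T per free (non-pivot) column."""
    n = len(A[0])
    R, pivots = rref(A)
    basis = []
    for free in (c for c in range(n) if c not in pivots):
        v = [Fraction(0)] * n
        v[free] = Fraction(1)
        for row, p in zip(R, pivots):            # pivot variable = -(free coefficient)
            v[p] = -row[free]
        basis.append(v)
    return basis

def apply(A, v):
    """Compute T v for the map with matrix A."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

A = [[1, 2, 3],
     [2, 4, 6]]           # T : Q^3 -> Q^2, so dim V = 3 > dim W = 2
_, pivots = rref(A)
basis = null_space_basis(A)

dim_V = len(A[0])
dim_range = len(pivots)
dim_null = len(basis)
print(dim_V == dim_null + dim_range)
print(all(apply(A, v) == [0, 0] for v in basis))
```

Since dim V > dim W here, the map cannot be injective, and the nonzero vectors in `basis` are exactly nonzero solutions of the homogeneous system with more variables than equations.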

3C Matrices

- Definition of a matrix

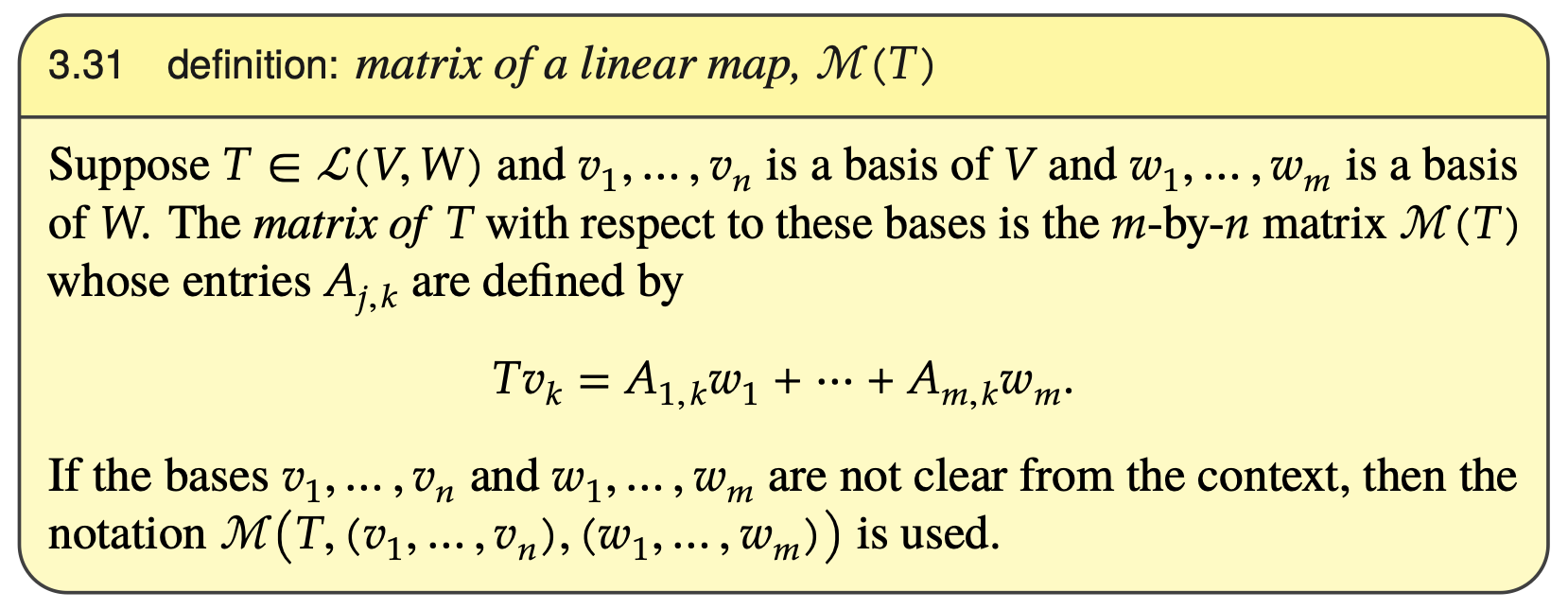

- Definition of matrix of a linear map:

- If $T$ is a linear map from $\mathbf{F}^n$ to $\mathbf{F}^m$, assuming standard bases, we can think of elements of $\mathbf{F}^m$ as columns of $m$ numbers and the k-th column of $\mathcal{M}(T)$ as $T$ applied to the k-th standard basis vector

- For the rest of the section: assume $U, V, W$ are finite-dim., each with a chosen basis

- Defines matrix addition

- In particular, matrix of the sum of linear maps:

- $S, T \in \mathcal{L}(V, W)$. Then $\mathcal{M}(S + T) = \mathcal{M}(S) + \mathcal{M}(T)$.

- Defines scalar multiplication of matrix

- Similarly for scalar times linear map:

- $\lambda \in \mathbf{F}$ and $T \in \mathcal{L}(V, W)$. Then $\mathcal{M}(\lambda T) = \lambda \mathcal{M}(T)$.

- Notation: for $m, n$ positive integers, the set of all $m$-by-$n$ matrices with entries in $\mathbf{F}$ is denoted $\mathbf{F}^{m,n}$

- With addition and scalar multiplication defined as above, $\mathbf{F}^{m,n}$ is a vector space of dimension $mn$.

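- Defines matrix multiplication

- Motivation: matrix of the product of linear maps

- If $T \in \mathcal{L}(U, V)$ and $S \in \mathcal{L}(V, W)$, then $\mathcal{M}(ST) = \mathcal{M}(S)\mathcal{M}(T)$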

- (Can use this motivation for understanding when matrices commute, when function composition retains certain properties)

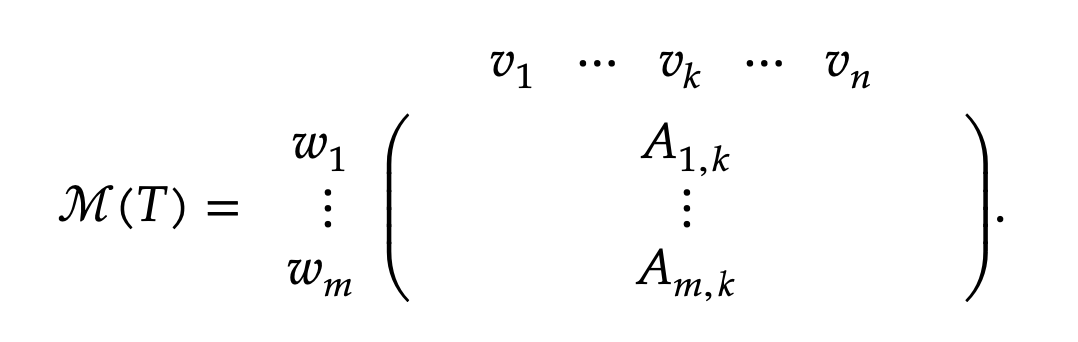

- Ways to think about matrix product entries:

- entry in row $j$, column $k$ of $AB$ is row $j$ of $A$ times column $k$ of $B$: $(AB)_{j,k} = A_{j,\cdot} B_{\cdot,k}$

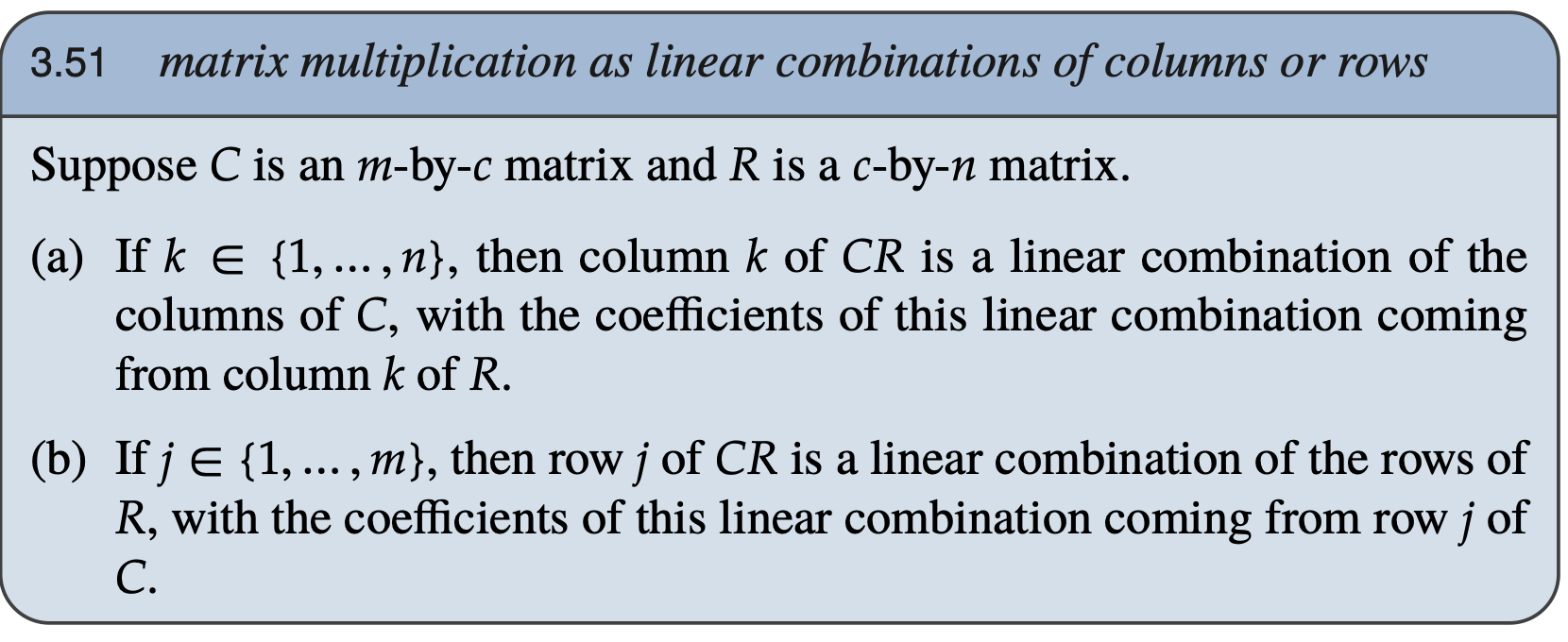

- column $k$ of $AB$ equals $A$ times column $k$ of $B$: $(AB)_{\cdot,k} = A B_{\cdot,k}$

- if $A$ is $m$-by-$n$ and $b$ is $n$-by-1 with entries $b_1, \dots, b_n$, then $Ab = b_1 A_{\cdot,1} + \dots + b_n A_{\cdot,n}$,

- i.e., $Ab$ is a linear combination of the columns of $A$, with coefficients given by the entries of $b$
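The three ways of reading a matrix product can be checked against each other on a small example. This is only a sketch, assuming matrices are stored as lists of rows; `matmul`, `column`, `matvec` and `combine_columns` are hypothetical helper names.

```python
def matmul(A, B):
    """Entry (j, k) of AB is row j of A times column k of B."""
    return [[sum(A[j][r] * B[r][k] for r in range(len(B)))
             for k in range(len(B[0]))]
            for j in range(len(A))]

def column(M, k):
    """Column k of M as a flat list."""
    return [row[k] for row in M]

def matvec(A, b):
    """A times the column b, computed row by row."""
    return [sum(a * x for a, x in zip(row, b)) for row in A]

def combine_columns(A, b):
    """b_1 * (column 1 of A) + ... + b_n * (column n of A)."""
    result = [0] * len(A)
    for k, coeff in enumerate(b):
        result = [r + coeff * c for r, c in zip(result, column(A, k))]
    return result

A = [[1, 2], [3, 4], [5, 6]]       # 3-by-2
B = [[7, 8, 9], [10, 11, 12]]      # 2-by-3
AB = matmul(A, B)

for k in range(3):
    b = column(B, k)
    # column k of AB = A times column k of B = combination of columns of A
    assert column(AB, k) == matvec(A, b) == combine_columns(A, b)
print(AB)
```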

- Column-row factorization & rank of matrices

- Definition of column and row rank:

- $A$ an $m$-by-$n$ matrix with entries in $\mathbf{F}$

- Column rank is dimension of the span of the columns of $A$ in $\mathbf{F}^{m,1}$ (so at most $\min(m, n)$)

- Row rank is dimension of span of the rows of $A$ in $\mathbf{F}^{1,n}$ (so at most $\min(m, n)$)

- Definition of matrix transpose

- Column-row factorization:

- Suppose $A$ is an $m$-by-$n$ matrix with entries in $\mathbf{F}$ and column rank $c \geq 1$. Then there exists an $m$-by-$c$ matrix $C$ and a $c$-by-$n$ matrix $R$, both with entries in $\mathbf{F}$, such that $A = CR$.

- Pf. Each column of $A$ is an $m$-by-1 matrix. The list of columns of $A$ can be reduced to a basis of the span of the columns, a list with length $c$. These $c$ columns in the basis can be put together to form an $m$-by-$c$ matrix $C$. Column $k$ of $A$ is a linear combination of the columns of $C$. Make the coefficients of this linear combination into column $k$ of a $c$-by-$n$ matrix, which we call $R$. Then $A = CR$.

- Column rank = row rank = rank of a matrix
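The proof of the column-row factorization is constructive, so it can be turned into a small computation. The sketch below is one way to do it, not the book's algorithm: it assumes rational entries and a nonzero matrix, and it uses row reduction to find which columns survive when the list of columns is reduced to a basis. The names `rref`, `column_row_factorization`, `matmul` and `transpose` are made up for these notes.

```python
from fractions import Fraction

def rref(A):
    """Return (reduced row echelon form of A, list of pivot column indices)."""
    M = [[Fraction(x) for x in row] for row in A]
    pivots, r = [], 0
    for col in range(len(M[0])):
        pivot = next((i for i in range(r, len(M)) if M[i][col] != 0), None)
        if pivot is None:
            continue
        M[r], M[pivot] = M[pivot], M[r]
        lead = M[r][col]
        M[r] = [x / lead for x in M[r]]
        for i in range(len(M)):
            if i != r and M[i][col] != 0:
                factor = M[i][col]
                M[i] = [a - factor * b for a, b in zip(M[i], M[r])]
        pivots.append(col)
        r += 1
    return M, pivots

def column_row_factorization(A):
    """Return (C, R) with A = C R; assumes A has at least one nonzero entry."""
    R_full, pivots = rref(A)
    # C: the pivot columns of A, a basis of the span of the columns of A
    C = [[Fraction(row[p]) for p in pivots] for row in A]
    # R: column k of the nonzero rows of rref(A) holds the coefficients that
    # express column k of A in terms of the columns of C
    R = R_full[:len(pivots)]
    return C, R

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]

def transpose(A):
    return [list(col) for col in zip(*A)]

A = [[1, 2, 3],
     [2, 4, 7],
     [3, 6, 10]]
C, R = column_row_factorization(A)
print(matmul(C, R) == A)

column_rank = len(rref(A)[1])
row_rank = len(rref(transpose(A))[1])   # column rank of the transpose
print(column_rank == row_rank)
```

Here `C` is 3-by-2 and `R` is 2-by-3, matching the $m$-by-$c$ and $c$-by-$n$ shapes in the statement with $c = 2$.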

3D Invertibility and Isomorphisms

- Defines invertible, inverse:

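- A linear map $T \in \mathcal{L}(V, W)$ is invertible if there exists a linear map $S \in \mathcal{L}(W, V)$ such that $ST$ equals the identity operator on $V$ and $TS$ equals the identity operator on $W$.

- A linear map $S \in \mathcal{L}(W, V)$ satisfying $ST = I$ and $TS = I$ is called an inverse of $T$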

- An invertible linear map has a unique inverse

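- Invertibility $\iff$ injectivity and surjectivity

- Sps. $V, W$ are finite-dim v.s. with dim $V$ = dim $W$ and $T \in \mathcal{L}(V, W)$.

- Then $T$ invertible $\iff$ $T$ injective $\iff$ $T$ surjective.

- Sps also that $S \in \mathcal{L}(W, V)$. Then $ST = I \iff TS = I$.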

- An isomorphism is an invertible linear map.

- Two finite-dim vector spaces are called isomorphic if there is an isomorphism from one vector space onto the other one

- dim $\mathcal{L}(V, W)$ = (dim $V$)(dim $W$) for $V, W$ finite-dim

- Linear maps thought of as matrix multiplication

- Matrix of a vector:

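- sps. $v \in V$ and $v_1, \dots, v_n$ a basis of $V$. Then the matrix of $v$ w.r.t. this basis is the $n$-by-1 matrix $\mathcal{M}(v) = (b_1, \dots, b_n)^T$, where $b_1, \dots, b_n$ are scalars s.t. $v = b_1 v_1 + \dots + b_n v_n$

- (if we have a linear map $T$ from $V$ to $W$, each v.s. with chosen basis, then the k-th column of $\mathcal{M}(T)$ equals $\mathcal{M}(T v_k)$)

- i.e. each column of a linear map represented as a matrix is the linear map’s action on the corresponding basis vector in the domain, represented as a matrix

- Hence linear maps act like matrix multiplication:

- Suppose $T \in \mathcal{L}(V, W)$ and $v \in V$. Suppose $v_1, \dots, v_n$ a basis for $V$ and $w_1, \dots, w_m$ a basis for $W$. Then $\mathcal{M}(Tv) = \mathcal{M}(T)\mathcal{M}(v)$.

- Pf. Sps. $v = b_1 v_1 + \dots + b_n v_n$, with coefficients in $\mathbf{F}$. Then $Tv = b_1 T v_1 + \dots + b_n T v_n$ (by linearity). Hence $\mathcal{M}(Tv) = b_1 \mathcal{M}(Tv_1) + \dots + b_n \mathcal{M}(Tv_n) = b_1 \mathcal{M}(T)_{\cdot,1} + \dots + b_n \mathcal{M}(T)_{\cdot,n}$ (from the above). This equals $\mathcal{M}(T)\mathcal{M}(v)$, as desired.

- Sps. $V, W$ are finite-dim and $T \in \mathcal{L}(V, W)$. Then dim range $T$ equals the column rank of $\mathcal{M}(T)$.

- Pf. Sps. $v_1, \dots, v_n$ a basis of $V$ and $w_1, \dots, w_m$ a basis of $W$. The linear map that takes $w \in W$ to $\mathcal{M}(w)$ is an isomorphism from $W$ onto the space $\mathbf{F}^{m,1}$. The restriction of this isomorphism to range $T$ is an isomorphism from range $T$ onto span$(\mathcal{M}(Tv_1), \dots, \mathcal{M}(Tv_n))$. The matrix $\mathcal{M}(Tv_k)$ equals column $k$ of $\mathcal{M}(T)$, so dim range $T$ equals the column rank of $\mathcal{M}(T)$.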

- Change of basis

- Defines identity matrix

- Defines invertible matrices (matrix inverse not introduced with det / computational method)

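- Suppose $T \in \mathcal{L}(U, V)$ and $S \in \mathcal{L}(V, W)$. If $u_1, \dots, u_m$ a basis of $U$ and $v_1, \dots, v_n$ a basis of $V$ and $w_1, \dots, w_p$ a basis of $W$, then $\mathcal{M}(ST, (u_1, \dots, u_m), (w_1, \dots, w_p)) = \mathcal{M}(S, (v_1, \dots, v_n), (w_1, \dots, w_p)) \, \mathcal{M}(T, (u_1, \dots, u_m), (v_1, \dots, v_n))$.

- Essentially same result of linear map and matrix mult., with explicit bases

- Suppose $u_1, \dots, u_n$ and $v_1, \dots, v_n$ are bases of $V$. Then the matrices $\mathcal{M}(I, (u_1, \dots, u_n), (v_1, \dots, v_n))$ and $\mathcal{M}(I, (v_1, \dots, v_n), (u_1, \dots, u_n))$ are inverses of each other

- Change of basis formula:

- Shorthand notation: $\mathcal{M}(T, (u_1, \dots, u_n)) = \mathcal{M}(T, (u_1, \dots, u_n), (u_1, \dots, u_n))$

- Sps. $T \in \mathcal{L}(V)$. Sps $u_1, \dots, u_n$ and $v_1, \dots, v_n$ are bases of $V$. Let $A = \mathcal{M}(T, (u_1, \dots, u_n))$ and $B = \mathcal{M}(T, (v_1, \dots, v_n))$ and $C = \mathcal{M}(I, (u_1, \dots, u_n), (v_1, \dots, v_n))$. Then $A = C^{-1} B C$.

- Suppose $v_1, \dots, v_n$ a basis of $V$ and $T \in \mathcal{L}(V)$ invertible. Then $\mathcal{M}(T^{-1}) = (\mathcal{M}(T))^{-1}$, where both matrices are w.r.t. basis $v_1, \dots, v_n$.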

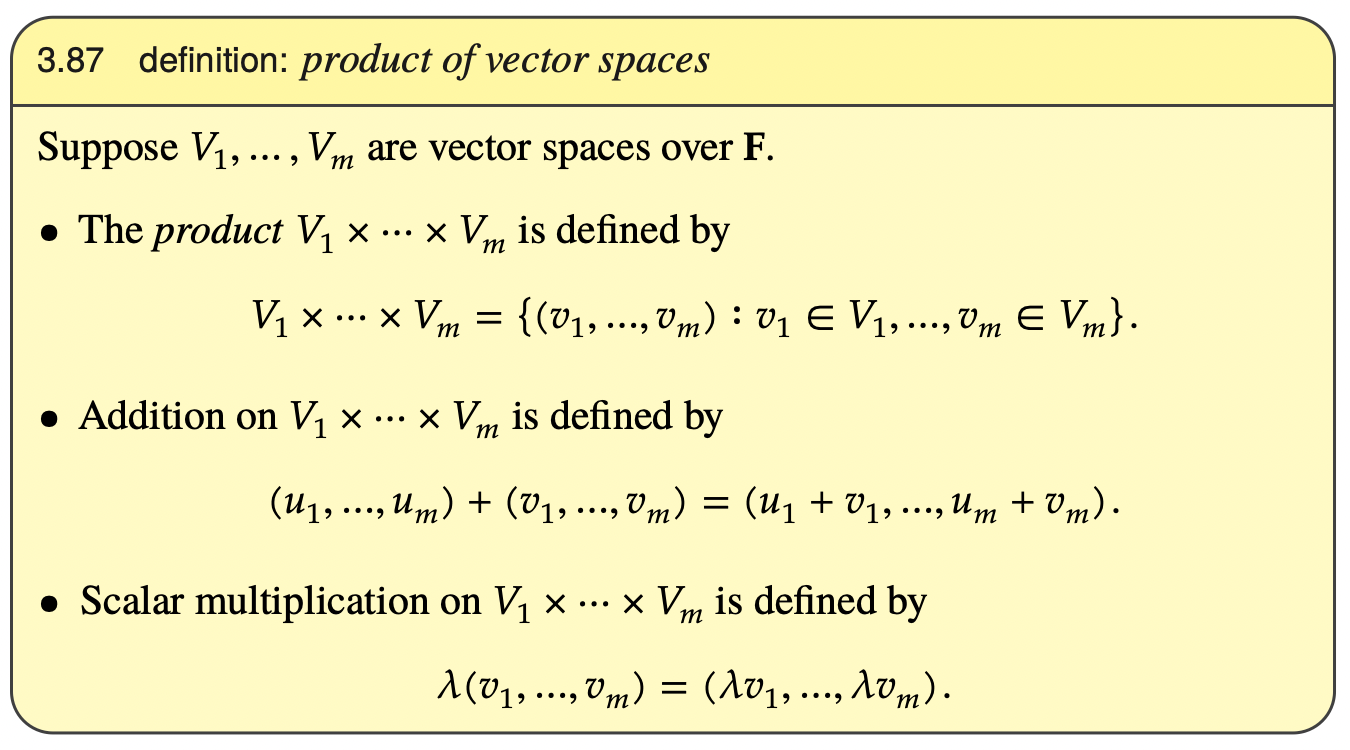
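A small numeric check of the change of basis formula $A = C^{-1} B C$. This is a sketch under some assumptions: $V = \mathbf{Q}^2$, the basis $v_1, v_2$ is the standard basis, and $u_1, u_2$ is another basis chosen for illustration. `matmul` and `inverse_2x2` are hypothetical helpers.

```python
from fractions import Fraction

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]

def inverse_2x2(M):
    """Inverse of a 2-by-2 matrix via the adjugate formula."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("matrix is not invertible")
    return [[d / det, -b / det], [-c / det, a / det]]

B = [[2, 1],
     [0, 3]]                  # M(T) w.r.t. the standard basis v1, v2
u1, u2 = (1, 0), (1, 1)       # another basis of Q^2
# C = M(I, (u1, u2), (v1, v2)): column k holds the standard coordinates of u_k
C = [[u1[0], u2[0]],
     [u1[1], u2[1]]]

A = matmul(matmul(inverse_2x2(C), B), C)   # M(T) w.r.t. u1, u2
print(A)

# Check against the definition of M(T, (u1, u2)):
# T u_k should equal A[0][k] * u1 + A[1][k] * u2.
basis = [u1, u2]
for k, u in enumerate(basis):
    Tu = [sum(B[i][j] * u[j] for j in range(2)) for i in range(2)]
    combo = [sum(A[j][k] * basis[j][i] for j in range(2)) for i in range(2)]
    assert Tu == combo
```

In this example `A` comes out diagonal, because $u_1$ and $u_2$ happen to satisfy $Tu_1 = 2u_1$ and $Tu_2 = 3u_2$.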

3E Products and Quotients of Vector Spaces

- Product of vector spaces is a vector space

- Dimension of a product is the sum of dimensions

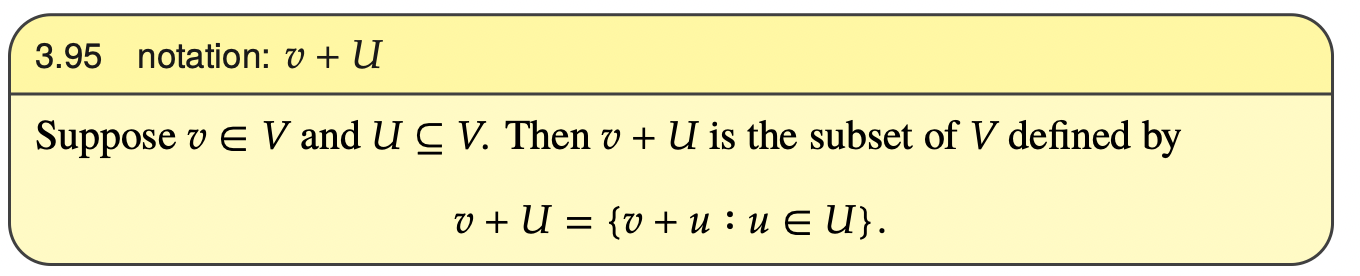

- Definition of a translate: for $v \in V$ and $U \subseteq V$, $v + U = \{v + u : u \in U\}$

- Defines a quotient space:

- Sps. $U$ a subspace of $V$. Then the quotient space $V/U$ is the set of all translates of $U$. Thus, $V/U = \{v + U : v \in V\}$

- Want this quotient space to be a vector space.

- Sps. $U$ a subspace of $V$ and $v, w \in V$. Then $v - w \in U \iff v + U = w + U \iff (v + U) \cap (w + U) \neq \varnothing$

- i.e., two translates of a subspace are equal or disjoint

- Defining addition and scalar multiplication on $V/U$:

- Sps. $U$ a subspace of $V$. Then addition and scalar multiplication on $V/U$ are defined as: $(v + U) + (w + U) = (v + w) + U$ and $\lambda(v + U) = (\lambda v) + U$ for all $v, w \in V$ and $\lambda \in \mathbf{F}$

- With the operations above $V/U$ is a vector space.

- Defines quotient map:

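- $U$ a subspace of $V$. The quotient map $\pi : V \to V/U$ is the linear map defined by $\pi(v) = v + U$ for each $v \in V$.

- dim $V/U$ = dim $V$ - dim $U$ (for $V$ finite-dim)

- Sps. $T \in \mathcal{L}(V, W)$. Define $\tilde{T} : V/(\operatorname{null} T) \to W$ by $\tilde{T}(v + \operatorname{null} T) = Tv$.

- $\tilde{T} \circ \pi = T$, where $\pi$ is the quotient map of $V$ onto $V/(\operatorname{null} T)$

- $\tilde{T}$ is injective

- range $\tilde{T}$ = range $T$

- $V/(\operatorname{null} T)$ is isomorphic to range $T$

- Hence we can think of $\tilde{T}$ as a modified version of $T$, with a domain that produces a 1-1 map.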
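The "equal or disjoint" criterion and the well-definedness of addition on a quotient space can be spot-checked on a toy case. A minimal sketch, assuming $V = \mathbf{Q}^2$ and $U = \operatorname{span}((1, 2))$; the names `in_U`, `same_translate` and `add` are made up here.

```python
from fractions import Fraction

U_DIRECTION = (Fraction(1), Fraction(2))   # U = span((1, 2)) in Q^2

def in_U(x):
    """x is in span((1, 2)) iff the 2-by-2 determinant of (x, direction) is 0."""
    return x[0] * U_DIRECTION[1] - x[1] * U_DIRECTION[0] == 0

def same_translate(v, w):
    """v + U == w + U  iff  v - w is in U."""
    return in_U((v[0] - w[0], v[1] - w[1]))

def add(v, w):
    return (v[0] + w[0], v[1] + w[1])

# Two representatives of each of two translates
v, v_alt = (0, 1), (1, 3)      # v_alt - v = (1, 2) is in U
w, w_alt = (2, 0), (0, -4)     # w_alt - w = (-2, -4) is in U

print(same_translate(v, v_alt), same_translate(w, w_alt))
print(same_translate(v, w))    # different translates
# (v + U) + (w + U) does not depend on the chosen representatives:
print(same_translate(add(v, w), add(v_alt, w_alt)))
```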

3F Duality

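- A linear functional on $V$ is a linear map from $V$ to $\mathbf{F}$ (an element of $\mathcal{L}(V, \mathbf{F})$)

- The dual space of $V$, denoted $V'$, is the vector space of all linear functionals on $V$, i.e., $V' = \mathcal{L}(V, \mathbf{F})$

- Sps. $V$ is finite dimensional. Then $V'$ is also finite-dimensional and dim $V'$ = dim $V$.

- Pf. dim $V'$ = dim $\mathcal{L}(V, \mathbf{F})$ = (dim $V$)(dim $\mathbf{F}$) = dim $V$.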

-

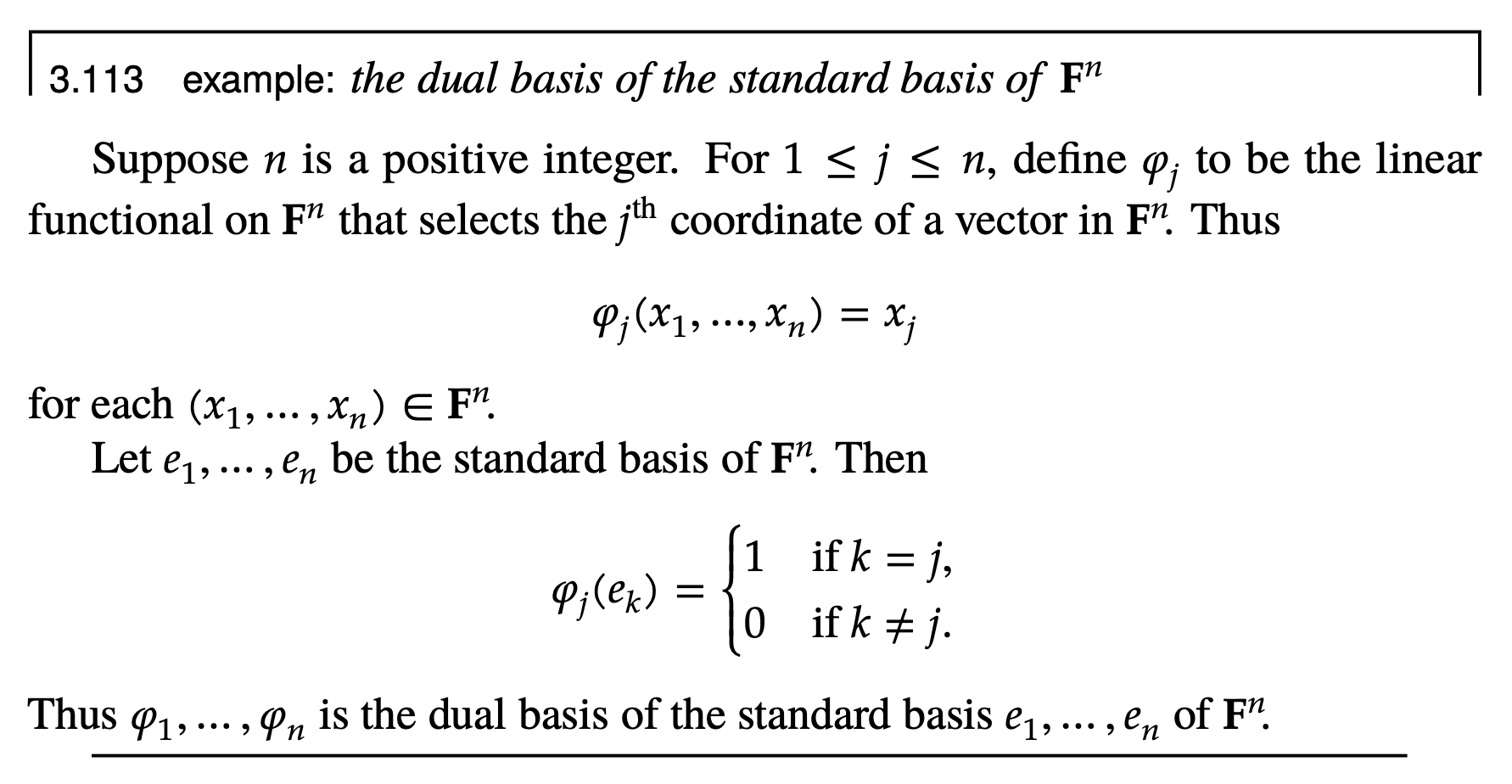

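If $v_1, \dots, v_n$ is a basis of $V$, then the dual basis of $v_1, \dots, v_n$ is the list $\varphi_1, \dots, \varphi_n$ of elements of $V'$, where each $\varphi_j$ is the linear functional on $V$ such that $\varphi_j(v_k) = 1$ if $k = j$ and $\varphi_j(v_k) = 0$ if $k \neq j$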

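- The dual basis of a basis of $V$ consists of the linear functionals on $V$ that give the coefficients for expressing a vector in $V$ as a linear combination of the basis vectors:

- Sps. $v_1, \dots, v_n$ a basis of $V$ and $\varphi_1, \dots, \varphi_n$ is the dual basis. Then $v = \varphi_1(v) v_1 + \dots + \varphi_n(v) v_n$ for each $v \in V$.

- For $V$ finite dimensional, the dual basis of a basis of $V$ is a basis of the dual space $V'$.

- Sps. $T \in \mathcal{L}(V, W)$. The dual map of $T$ is the linear map $T' \in \mathcal{L}(W', V')$ defined for each $\varphi \in W'$ by $T'(\varphi) = \varphi \circ T$.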
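For a concrete feel of the dual basis and the dual map, here is a minimal sketch, assuming $V = \mathbf{Q}^2$ with the basis $v_1 = (1, 1)$, $v_2 = (1, 2)$ picked for illustration. In coordinates, the dual basis functionals are the rows of the inverse of the matrix whose columns are $v_1, v_2$. The names `dual_basis_2d`, `dual_map` and the operator `T` are hypothetical.

```python
from fractions import Fraction

def dual_basis_2d(v1, v2):
    """Return (phi1, phi2), the dual basis of the basis v1, v2 of Q^2."""
    det = Fraction(v1[0] * v2[1] - v1[1] * v2[0])
    if det == 0:
        raise ValueError("v1, v2 is not a basis")
    # rows of the inverse of the matrix with columns v1, v2
    row1 = (v2[1] / det, -v2[0] / det)
    row2 = (-v1[1] / det, v1[0] / det)
    phi1 = lambda x: row1[0] * x[0] + row1[1] * x[1]
    phi2 = lambda x: row2[0] * x[0] + row2[1] * x[1]
    return phi1, phi2

def dual_map(T):
    """T'(phi) = phi composed with T."""
    return lambda phi: (lambda v: phi(T(v)))

v1, v2 = (1, 1), (1, 2)
phi1, phi2 = dual_basis_2d(v1, v2)

# phi_j(v_k) is 1 if j == k and 0 otherwise
print(phi1(v1), phi1(v2), phi2(v1), phi2(v2))

# phi_1(v), phi_2(v) are the coefficients of v in the basis v1, v2
v = (3, 5)
c1, c2 = phi1(v), phi2(v)
print((c1 * v1[0] + c2 * v2[0], c1 * v1[1] + c2 * v2[1]))

# dual map of a sample operator T(x) = (x_1 + x_2, x_2)
T = lambda x: (x[0] + x[1], x[1])
print(dual_map(T)(phi1)(v) == phi1(T(v)))
```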

CH. 3 NOT DONEEEE

Chapter 5—Eigenvalues and Eigenvectors

Standing notation: $\mathbf{F}$ is the reals or the complex numbers, $V$ is a vector space over $\mathbf{F}$

Eigenvalues

- A linear map from a vector space to itself is called an operator

-

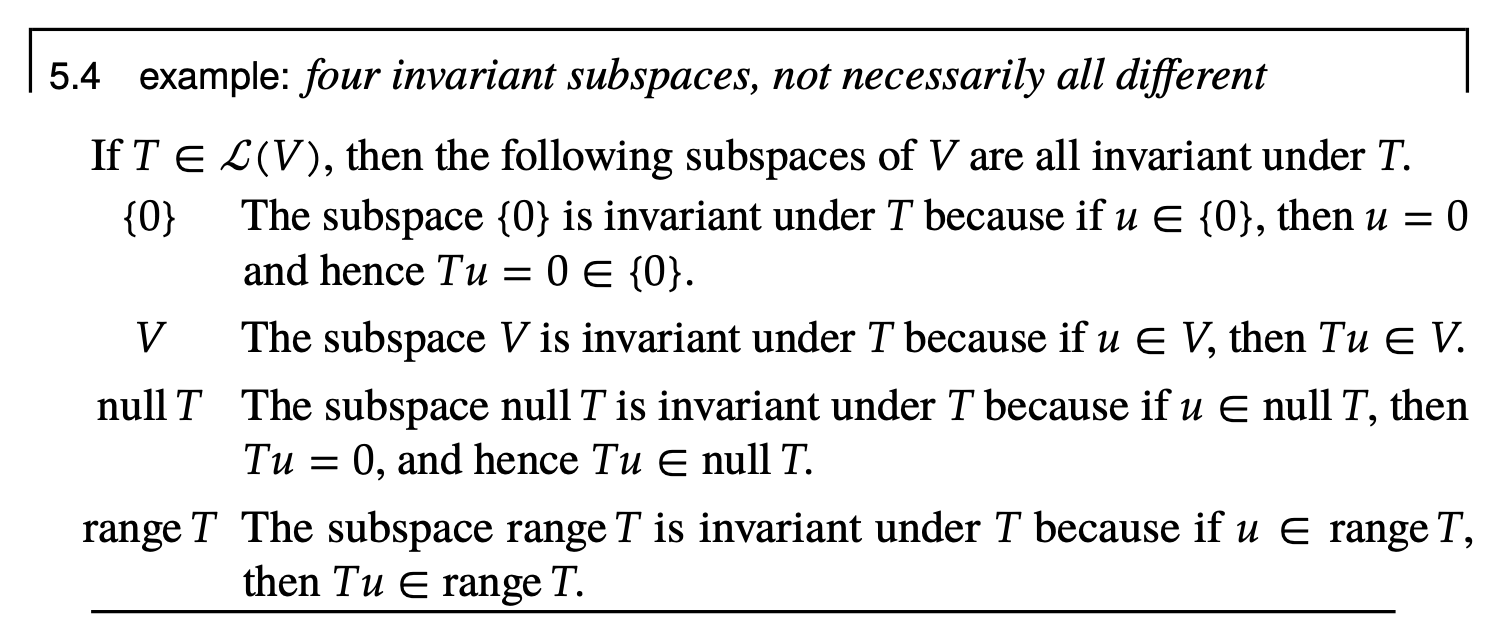

$T \in \mathcal{L}(V)$. A subspace $U$ of $V$ is called invariant under $T$ if $Tu \in U$ for every $u \in U$

- Motivation: to understand the behavior of a linear operator $T$, it is enough to understand the behavior of $T$ restricted to subspaces whose direct sum is all of $V$. However, to apply many useful tools, we want the restriction of $T$ to a subspace (say, $U$) to map back into $U$, hence we would like to study invariant subspaces

- Thus $U$ is invariant under $T$ if $T|_U$ is an operator on $U$

- The simplest possible nontrivial invariant subspaces (other than $\{0\}$ and $V$) are invariant subspaces of dimension 1.

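- Take any $v \in V$ with $v \neq 0$ and let $U = \{\lambda v : \lambda \in \mathbf{F}\} = \operatorname{span}(v)$. $U$ is a 1 dimensional subspace of $V$ (and every 1-dimensional subspace of $V$ is of this form for some appropriate $v$).

- If $U$ is invariant under an operator $T \in \mathcal{L}(V)$, then $Tv \in U$, and so there exists a scalar $\lambda \in \mathbf{F}$ such that $Tv = \lambda v$.

- Conversely, if $Tv = \lambda v$ for some $\lambda \in \mathbf{F}$, then $\operatorname{span}(v)$ is a 1-dimensional subspace of $V$ invariant under $T$.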

- $T \in \mathcal{L}(V)$. A number $\lambda \in \mathbf{F}$ is an eigenvalue of $T$ if there exists $v \in V$ such that $v \neq 0$ and $Tv = \lambda v$.

- For an eigenvalue $\lambda$, a corresponding vector $v \neq 0$ s.t. $Tv = \lambda v$ is an eigenvector

- Every list of eigenvectors corresponding to distinct eigenvalues of $T$ is linearly independent

- For a finite-dimensional vector space $V$, each operator on $V$ has at most dim $V$ distinct eigenvalues
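The eigenvalue definition is easy to test on a concrete operator. A minimal sketch, assuming $T$ is the operator on $\mathbf{Q}^2$ with the sample matrix `A` below w.r.t. the standard basis; `apply` and `is_eigenpair` are hypothetical helper names, and the eigenpairs are supplied by hand rather than computed.

```python
from fractions import Fraction

def apply(A, v):
    """Apply the operator with matrix A (w.r.t. the standard basis) to v."""
    return [sum(Fraction(a) * x for a, x in zip(row, v)) for row in A]

def is_eigenpair(A, lam, v):
    """True if v is nonzero and A v = lam * v."""
    return any(x != 0 for x in v) and apply(A, v) == [lam * x for x in v]

A = [[2, 1],
     [0, 3]]
pairs = [(2, [1, 0]), (3, [1, 1])]   # candidate (eigenvalue, eigenvector) pairs

for lam, v in pairs:
    print(lam, v, is_eigenpair(A, lam, v))

# (0, 1) is not an eigenvector: A(0, 1) = (1, 3) is not a multiple of (0, 1),
# so span((0, 1)) is not invariant under this operator.
print(is_eigenpair(A, 2, [0, 1]), is_eigenpair(A, 3, [0, 1]))

# Eigenvectors for the distinct eigenvalues 2 and 3 are linearly independent:
# in Q^2 this is the same as a nonzero 2-by-2 determinant.
(a, c), (b, d) = pairs[0][1], pairs[1][1]
det = a * d - b * c
print(det != 0)
```

Two distinct eigenvalues is also the maximum possible here, since dim $V$ = 2.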